✨If only there were an easy way to ask customers what they think. Preferably low-cost and fast. And scalable, maybe.

[revelation bulb 💡] There are surveys.

And if you have ever looked into the topic, you probably know there are quite a few types of surveys out there. So, how do you navigate them?

This article simplifies the choice of survey research methods to help you align them with specific goals and secure trustworthy findings. It is designed for anyone collecting data.

We will explore different survey types, their intended purposes, and practical advice for their use. After reading, you'll clearly understand how to apply survey methods to gather and interpret valuable feedback effectively.

1, 2, 3 survey!

What is a survey and survey research?

A survey is a research method consisting of a series of questions aimed at extracting specific data from a particular group of people. It is widely used across various fields, such as marketing, social science, and public health, to uncover trends, attitudes, and behaviors.

Surveys can provide quantitative data—numerical information that can be analyzed statistically—as well as qualitative insights, which delve into the reasoning behind certain trends or opinions.

Survey research is a method of collecting data from individuals to gather information and insights.

The survey research process involves selecting a sample that represents a larger population, formulating questions designed to collect clear responses, and distributing the survey through one of several methods, including online, by mail, or in person.

10 benefits of survey research

Survey research offers a range of benefits, making it a popular method in various fields, such as social sciences, marketing, health, and public policy. Here are some of the key potential benefits:

1. Cost-effectiveness

Surveys can be relatively inexpensive, especially when conducted online or via email. They allow you to collect data from large samples without the high costs associated with other methods, like user research interviews.

Modern survey tools make it easy for anyone, including those with limited research experience, to design and distribute surveys, democratizing the research process.

2. Large sample sizes = more reliable results

As your sample size increases, sampling error shrinks, so the results become more reliable and support stronger conclusions, provided the sample is representative of the audience you care about.

Surveys can reach large numbers of respondents, increasing the generalizability of the results. This is particularly important for studies aiming to make inferences about larger audiences.
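To see how sample size affects reliability, here is a minimal sketch using the standard margin-of-error formula for a proportion. It assumes a simple random sample and a 95% confidence level (z = 1.96); the function name and defaults are illustrative, not part of any survey tool.

```python
import math


def margin_of_error(sample_size: int, proportion: float = 0.5, z: float = 1.96) -> float:
    """Approximate margin of error for a proportion from a simple random sample.

    proportion=0.5 is the most conservative assumption (widest margin);
    z=1.96 corresponds to a 95% confidence level.
    """
    if sample_size <= 0:
        raise ValueError("sample_size must be positive")
    return z * math.sqrt(proportion * (1 - proportion) / sample_size)


# Compare a few sample sizes.
for n in (100, 1000, 5000):
    print(f"n={n}: +/-{margin_of_error(n) * 100:.1f} percentage points")
# n=100: +/-9.8 percentage points
# n=1000: +/-3.1 percentage points
# n=5000: +/-1.4 percentage points
```

Note the diminishing returns: going from 100 to 1,000 responses cuts the margin by about two thirds, while going from 1,000 to 5,000 only roughly halves it again.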

When drawing conclusions based on Survicate data, the sample size is usually in the thousands, which gives us confidence in the positive impact these changes will have on our customers.

Glen Hamilton, Senior Director of Digital Growth at Fortive

3. Flexibility

You can distribute surveys in many different ways. Online surveys alone can be distributed via email or link, as website pop-ups, in-product, or in a mobile app. This flexibility allows you to choose the best method for your target audience and research objectives.

We use almost all available channels. We don't send letters with NPS yet, but maybe we'll get there one day 😅

Krzysztof Szymański, Former Head of CRM at Taxfix

4. Quantifiable data and trend analysis

Surveys often produce quantitative data, which can be easily analyzed using analytics tools. It allows you to create objective comparisons, establish metrics and KPIs, and build trendlines.

By conducting surveys at different points in time, you can track changes in opinions, behaviors, or other variables, allowing for trend analysis over time.

💡A recurrent NPS survey is a great tool to build a sentiment trendline.

You need to start somewhere and then see the baseline. Our business depends on the weather, so our users are probably less satisfied on rainy days. It’s quite the opposite when it’s sunny. We can see this in the surveys, but we needed a year or two to establish a baseline. User satisfaction went up in summer and down a bit in winter. Now we know it’s a normal trend.

Falco Kübler, Senior Product Owner at wetter.com

5. Time-efficiency

Surveys can be designed to be quick to complete, minimizing the time burden on respondents. They also allow researchers to gather data in a relatively short time frame compared to other methods like longitudinal studies.

There’s a sports brand for which we can get enough responses with very high statistical significance within just three or four hours. People respond because they want to and because it's something they really are involved in.

Patricia Caldas, UXR Manager at Medialivre

6. Wide geographic reach

Surveys, especially online ones, can be distributed across different regions and even globally, making it possible to collect data from diverse populations.

💡If your audience is international, consider using multilingual surveys that get translated automatically depending on the language your respondents set for their browser.

7. Versatility in question design

Surveys can include a mix of question types, such as multiple-choice, Likert scales, open-ended questions, and more. This way, you can explore different aspects of a topic and collect a rich data set.

Additionally, they can be tailored to specific research needs for targeted data collection on particular issues, demographics, or sectors.

We have implemented Survicate [surveys] to get user feedback in a given context. So, as opposed to the more standard way of fielding surveys, sending an email to invite users to answer several questions, Survicate allows us to get feedback on the particular experience of the site or the app as the user is experiencing it, which makes the answers much more contextual and accurate.

Sandrine Veillet, VP of Global Product at Medscape

8. Anonymity

You can design anonymous surveys, encouraging more honest and accurate responses, especially for sensitive topics.

💡Although at Survicate we usually recommend identifying your respondents, there are cases in which anonymity can work better. It can, for example, reduce social desirability bias, where respondents might otherwise provide answers they think are more socially acceptable.

9. Capability to handle complex questions

Surveys can include complex question designs, for example, by using question logic, which can help delve deeper into the respondents' reasoning.

💡For example, Medscape, a global online portal for physicians and healthcare professionals, used email surveys to test its hypothesis regarding a potential development in the brand’s content base.

It was complex research, but it was managed with an unmoderated survey and specially designed survey logic that first tested whether the participants were properly prepared to take part in the survey research.
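To make the idea of question logic more concrete, here is a minimal sketch of a survey with a screening question that routes respondents down different paths. The survey structure, question IDs, and function names are hypothetical and only illustrate the concept; they are not the format any particular survey tool uses.

```python
# Hypothetical survey definition: each question either always leads to the
# same follow-up ("next") or picks one based on the answer ("branches").
SURVEY = {
    "q1": {
        "text": "Have you used the new search feature?",
        "branches": {"yes": "q2", "no": "q3"},
    },
    "q2": {"text": "How easy was it to find what you needed?", "next": "q4"},
    "q3": {"text": "What stopped you from trying it?", "next": "q4"},
    "q4": {"text": "Anything else you'd like to share?", "next": None},
}


def next_question(survey, current_id, answer):
    """Return the ID of the question to show next, or None to end the survey."""
    question = survey[current_id]
    branches = question.get("branches", {})
    if answer in branches:
        return branches[answer]
    return question.get("next")


def run_path(survey, start_id, answers):
    """List the question IDs a respondent sees, given their answers."""
    path = []
    current = start_id
    while current is not None:
        path.append(current)
        current = next_question(survey, current, answers.get(current))
    return path


print(run_path(SURVEY, "q1", {"q1": "yes"}))  # ['q1', 'q2', 'q4']
print(run_path(SURVEY, "q1", {"q1": "no"}))   # ['q1', 'q3', 'q4']
```

Each respondent only sees the questions that apply to them, which keeps the survey short while still allowing you to dig into the reasoning behind an answer.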

10. Ease of analysis

If you decide to use customer feedback software, it will easily process and analyze survey data, enabling you to gain insights quickly and efficiently.

Survicate's built-in analytics dashboard shows survey results in real time, automatically calculating Net Promoter Score (NPS), Customer Satisfaction Score (CSAT), or Customer Effort Score (CES).

Moreover, you can categorize your qualitative feedback with Insights Hub and ask additional questions to a chat-based Research Assistant that will draw answers from the available feedback.

How to choose the right survey method?

When deciding on the best survey method for your needs, take into account the following factors:

Research objectives

Clearly define what you want to achieve with your survey. Different goals require different survey approaches. For example, you will use a different survey design and different distribution methods for a recurrent relational NPS survey than for a brand awareness survey.

Data type required

The type of data you’re after is directly related to your survey goals. Decide if you need quantitative data, which is easily obtained through structured surveys with closed-ended questions, or qualitative data, which may necessitate more open-ended questions and discussions.

Target audience

Identify where your audience is most likely to be reached and consider their preferred mode of communication. It may determine the distribution method you’ll choose—if the audience you’re trying to reach spends most of their time inside your product, sending an email survey will be ineffective.

The reason we have implemented Survicate is the ability to get user feedback in a given context. So, as opposed to the more standard way of fielding surveys, sending an email to invite users to answer several questions, Survicate allows us to get feedback on the particular experience of the site or the app as the user is experiencing it, which makes the answers much more contextual and accurate.

Sandrine Veillet, VP of Global Product at Medscape

It’s also important to choose a tool that offers advanced targeting options that allow you to reach a very particular group.

💡For example, Survicate allows you to create user segments based on various parameters such as the number of visits, visit source, language, attributes, and many more. You can also include up to 50 filters based on which you'd like to segment your audience.

Time availability

Choose a method that fits within your timeline. Online surveys provide quicker results than traditional mail surveys.

What types can research surveys take?

Surveys can be distributed through various channels, each with its own set of advantages. Understanding the different types of survey distribution methods can help you select the most effective approach for your research needs.

Let's explore the types of surveys based on how you distribute them.

Email surveys

Email surveys are sent directly to participants' inboxes. This is convenient for recipients, who can respond whenever they want.

With this method, you should target your campaign well to reach individuals who have already engaged with your brand or service.

To improve response rates, make sure your survey tool collects partial responses. Then, embed your surveys inside emails and keep the design on-brand.

Email works well for longer, more detailed surveys, but also for the complete opposite: general surveys detached from your product context (recurrent NPS surveys are one example).

The NPS is an email survey because we don't want it to pop up on the platform and be connected with a random user action. We aim for general user feedback. We send it as an email because it allows us to customize the survey a bit more. So we can ask for a lot more detailed feedback. And it's much easier if we have it as a whole page instead of just in-product.

Alessa Fleischer, Product Manager at Workwise

In-product surveys

In-product surveys are embedded directly within your service or application. They capture customer feedback at the moment of user interaction, which can lead to more accurate and actionable insights.

This survey method is less intrusive and benefits from high engagement rates as it is part of the natural user experience.

💡For example, Workwise’s product teams use in-product surveys to capture contextual feedback from users while they’re engaged with the product. They want to know if users like the information presented to them, if anything’s missing, or what they’d like to see there.

Workwise targets certain aspects of the page, URLs, and user actions.

Website surveys

Website surveys are a type of online survey that can take the form of pop-ups, website widgets, or embedded questionnaires on a webpage.

They are useful for capturing the opinions of site visitors in real time, providing insights into user experience and satisfaction.

We recommend keeping website surveys as micro-surveys with a maximum of three questions to ensure effectiveness without disrupting the browsing experience.

At least once a week, we survey our audience on topics that concern them, quickly turning the opinions they share into news often picked up by other national news outlets. This way, users know that by responding to our surveys, their opinions are valued and amplified. It creates additional value around our brand and our community.

Simone Franconieri, Head of Product at Skuola.net

Link surveys

You can share link surveys across multiple platforms, including social media, SMS, or digital workspaces.

This online survey method offers flexibility in reaching a wider audience and can be used to gather a diverse range of responses. It's important to track which platforms get the best response rates to optimize future survey distributions.

💡For example, Prowly used a Survicate link survey to conduct research for their thought leadership report. As a PR platform, Prowly distributed the link via different channels, such as their own social media and the company’s media database. They also shared it with PR communities on Slack, and posted it as a HARO request, a popular tool for media professionals.

Mobile app surveys

With mobile surveys, you can easily collect in-app feedback. They should be brief and optimized for mobile interfaces to fit smaller screens and on-the-go lifestyles.

Choose mobile survey software with a simple design and straightforward navigation to maximize engagement.

Don’t forget the essential survey distribution rule of thumb: use mobile surveys if your target audience spends most of their time in your mobile app.

💡For example, Taxfix discovered that around 75% of their customers use a mobile app to do taxes. That’s why mobile app surveys are Taxfix’s main distribution channel.

Other survey distribution channels

In-person interviews

Face-to-face user research interviews or in-person surveys can provide comprehensive and nuanced information, while body language and tone of voice add even more context.

💡 They are highly interactive but can be costly and time-consuming. To make sure such an interview is efficient and focused, prepare a structured interview template.

Paper surveys

Paper surveys are traditional tools and can be useful in environments lacking digital access. They do not require internet connectivity, but data entry and analysis for paper survey research can be labor-intensive.

💡To manage this, create questions that are easy to process and analyze from collected paper surveys.

Phone surveys

You can achieve a more personal touch with a telephone survey and clarify any ambiguities in real time. However, telephone surveys require trained interviewers and may not reach respondents who favor communication via text or email.

💡Make sure questions are direct and the call script is standardized to maintain consistency across telephone surveys.

Kiosk surveys

Kiosk surveys are interactive, often touch-screen questionnaires displayed on kiosks in high-traffic areas, allowing for immediate feedback. They are ideal for capturing the retail customer experience as it happens, such as in stores or service centers.

Focus groups

Focus groups are small, diverse groups of people whose reactions to specific topics are studied. Moderators lead discussions to gain deep insights into participant attitudes and perceptions, making it a qualitative method valuable for exploring complex issues.

Panel surveys

A panel survey involves a pre-recruited group of individuals who agree to participate in multiple surveys over a period of time. This method ensures a reliable sample for longitudinal studies, helping you track changes in opinions or user behavior among the same set of respondents.

Types of survey questions

When designing a survey, your questions can make or break the data you collect. It is vital to understand the different question types and when to use them to gather meaningful insights effectively.

Closed-ended questions

Closed-ended questions are designed to elicit a specific response, such as "yes" or "no," a numerical rating, or a choice from a set list of options. They are quantifiable, which makes them easy to analyze.

They include, for example, single- and multiple-choice questions, and rating scales.

Best for: quick, concise, and quantifiable data collection.

Open-ended questions

Open-ended questions allow respondents to answer in their own words, providing richer, more nuanced information. This format is less restrictive and can offer insights that closed-ended questions might miss. Using open-ended questions can be invaluable for understanding the reasons behind behaviors or opinions, but the data can be more challenging to analyze due to its qualitative nature.

It doesn’t have to be, though.

💡With feedback management tools such as Insights Hub, you can have all your feedback categorized and analyzed automatically by a specially trained AI model. It dramatically cuts down the time necessary for qualitative feedback analysis.

Complementary to Insights Hub is the Research Assistant, an AI-based chat where you can ask all the questions you may have about your customers.

Best for: exploring deeper insights, understanding reasons behind behaviors, and collecting qualitative data that reveals, for example, feelings, motivations, and experiences.

Mixed-format questions

Mixed-format questions combine elements of both open and closed-ended questions. They might start with a closed-ended question and then offer an "Other" option where respondents can elaborate.

This hybrid approach provides the structured data of closed-ended questions with the depth of open-ended ones, making it a versatile choice for complex topics. Mixed-format questions enable you to gather a wide range of data without limiting respondent expression.

Best for: gathering both structured data and detailed insights

Types of surveys based on frequency

Surveys can be categorized by how often they are conducted. This frequency affects the type of data collected and the insights that can be drawn.

Cross-sectional surveys

Cross-sectional surveys are snapshots of a population at a specific point in time. They help understand current attitudes or behaviors but do not track changes over time.

These surveys are often used to measure how common certain outcomes or behaviors are. They help us understand how different things might be related and guide decisions in public policy or business. However, they don't prove one thing causes another—they only show connections between things.

💡For example, you might use a cross-sectional survey to assess consumer preferences for a new product. By surveying a sample of potential customers at one point in time, you can gather data on how the product is perceived, what features are most desired, and what price points are considered acceptable.

Longitudinal surveys

In contrast, longitudinal surveys are conducted repeatedly over an extended period. They can be further broken down into:

- Trend surveys: measure changes over time within a population, where different individuals may be surveyed in each wave.

- Cohort surveys: follow a specific sub-group or cohort with the same characteristics over time, observing how their responses change.

- Panel surveys: similar to cohort surveys, they involve repeatedly surveying the same individuals over time, allowing for detailed tracking of individual changes. The difference is that panel members don’t have to share the same characteristics.

The choice between cross-sectional and longitudinal surveys depends on whether your research aims to capture a momentary picture or observe trends and developments. Each type offers unique benefits and should align with your specific research objectives.

What can you do with different types of surveys?

Surveys are powerful tools to understand the market, customers, and products. When used effectively, they can help you make strategic decisions and 🤞 drive growth.

Some of the most popular use cases for surveys in business are:

Customer Experience

Customer experience surveys are essential for gauging satisfaction and identifying areas to improve customer loyalty.

Net Promoter Score

The Net Promoter Score (NPS) survey measures customer loyalty and overall satisfaction. It is perfect for building a trendline and can be one of the indicators of business growth.

Try our free NPS survey template ⤵️

The classic NPS question usually goes like this: How likely are you to recommend us to a friend or colleague? Respondents choose a score on a scale from 0 to 10 and fall into one of three groups:

🟢 Promoters (score 9-10): These are highly satisfied and loyal customers who are enthusiastic about the company, product, or service. They are likely to recommend it to others and contribute to positive word-of-mouth marketing.

🟡 Passives (score 7-8): These customers are generally satisfied but not particularly enthusiastic. They may be vulnerable to competitive offerings and are less likely to actively promote the company, product, or service.

🔴 Detractors (score 0-6): These customers are unhappy or dissatisfied with the company, product, or service. They may spread negative feedback, damaging the brand's reputation and potentially discouraging others from engaging with the company.

To calculate NPS, you need to subtract the percentage of detractors from the percentage of promoters.

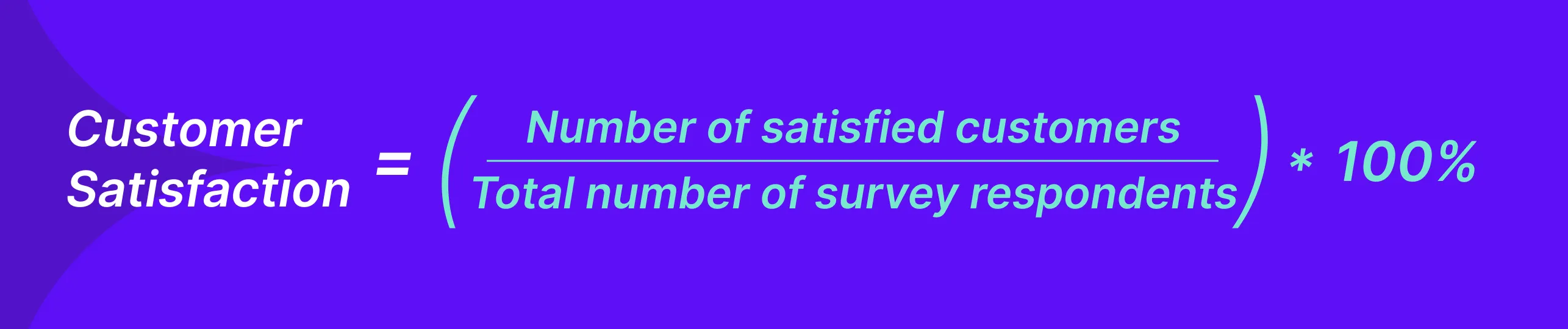
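The calculation described above can be sketched in a few lines of Python. This is a minimal illustration using the score bands listed earlier (9-10 promoters, 0-6 detractors); the function name and sample responses are made up for the example.

```python
def net_promoter_score(scores):
    """Return NPS: % of promoters (9-10) minus % of detractors (0-6).

    The result ranges from -100 (all detractors) to 100 (all promoters).
    Passives (7-8) count toward the total but not toward either group.
    """
    if not scores:
        raise ValueError("at least one response is required")
    if any(not 0 <= s <= 10 for s in scores):
        raise ValueError("scores must be between 0 and 10")
    promoters = sum(1 for s in scores if s >= 9)
    detractors = sum(1 for s in scores if s <= 6)
    return 100 * (promoters - detractors) / len(scores)


# Illustrative responses: 5 promoters, 2 passives, 3 detractors.
responses = [10, 9, 9, 8, 7, 6, 3, 10, 9, 5]
print(net_promoter_score(responses))  # 20.0
```

Here 50% of respondents are promoters and 30% are detractors, so the NPS is 20.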

Customer Satisfaction Score (CSAT)

A Customer Satisfaction Score (CSAT) survey allows you to obtain immediate feedback on customer satisfaction with a product, service, or interaction.

Try our free CSAT survey template ⤵️

The survey question usually asks something like, "How satisfied were you with your experience?" or "How would you rate your overall satisfaction with the product/service?"

To calculate CSAT, you need to take the number of positive responses (e.g., 4 and 5 on a 5-point scale), divide it by the total number of responses, and multiply by 100 to get a percentage.
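As a quick illustration of that calculation, here is a minimal Python sketch. It assumes a 5-point scale where ratings of 4 and 5 count as positive, as in the example above; the function name and sample ratings are made up.

```python
def csat_score(ratings, positive_threshold=4):
    """Return CSAT as the percentage of ratings at or above the threshold.

    Assumes a 5-point scale where 4 and 5 count as positive responses;
    adjust positive_threshold if you use a different scale.
    """
    if not ratings:
        raise ValueError("at least one response is required")
    positive = sum(1 for r in ratings if r >= positive_threshold)
    return 100 * positive / len(ratings)


# Illustrative ratings: 5 of the 8 responses are a 4 or a 5.
ratings = [5, 4, 3, 5, 2, 4, 4, 1]
print(csat_score(ratings))  # 62.5
```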

Product research

Product research is a way to learn about what people want and need in a product. It involves gathering and analyzing information to help make smart choices about creating or improving a product or figuring out the best way to market it.

Product surveys are an important element of this kind of research. They allow you to collect user feedback on your offerings, guiding product development and feature optimization.

Microsurveys

Microsurveys should be your first thought when starting product research inside your product. These are short surveys consisting of a maximum of three to four questions that your app users can answer in seconds rather than minutes.

Why? Well, although you want to catch your respondents while they are using your product, you don’t want to disrupt their experience at the same time.

Microsurveys are non-intrusive and can capture contextual feedback. That’s why they’re a great choice for evaluating new features or recruiting users for an interview.

Product feature prioritization survey

A product feature prioritization survey collects insights into customers' desired features or improvements.

It helps to validate your hypotheses regarding roadmap prioritization with objective quantitative research. A feature prioritization survey can also be helpful in tying your development plans to business objectives and risks (will people use it?).

Try our free product feature prioritization survey template ⤵️

Product use and satisfaction survey

A product use and satisfaction survey is about understanding how your users feel about different parts of your software. It's designed to see which features are working well and which ones might need some attention, helping you make informed decisions about improving your product.

After all, the success of your software depends on how happy your users are.

Try our free software evaluation survey template ⤵️

Market research

Market research evaluates the market size, trends, competitive landscape, and customer demographics. It will help you assess whether your product has a chance to succeed in the intended market, identify opportunities, and optimize your overall product and marketing strategies.

Market research survey

Market research surveys are handy tools for understanding your audience and the surrounding market. They provide critical insights for making better decisions and strategic plans.

Try our free market research survey template ⤵️

Customer segmentation survey

A customer segmentation survey is a tool for dividing customers into specific groups or segments based on shared characteristics or behaviors.

These segments can be used to personalize content and communication with different audiences in a way that is more appealing to them.

Try our free customer segmentation survey template ⤵️

Exit intent

Exit intent surveys or exit surveys reveal the reasons behind user departures, providing actionable insights to reduce churn rates.

Website exit survey

A website exit intent survey shows up on your page when a visitor is about to leave it. Usually, it’s triggered when a person moves the cursor above a certain line (for Survicate, it’s 20px below the top of the page), or based on fast mouse movements to the top of the page.
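The cursor-line trigger can be sketched as a simple check. On a real website this logic would run in the browser; the Python below only illustrates the rule, assuming the cursor position is measured in pixels from the top of the page. The function name and the way positions are passed in are hypothetical, and only the 20px value comes from the description above.

```python
EXIT_LINE_PX = 20  # line 20px below the top of the page, as described above


def is_exit_intent(previous_y, current_y, exit_line_px=EXIT_LINE_PX):
    """Return True when the cursor moves upward across the exit line.

    previous_y and current_y are the cursor's vertical positions in pixels
    from the top of the page (smaller values are closer to the top).
    """
    return previous_y > exit_line_px >= current_y


print(is_exit_intent(300, 10))   # True: cursor crossed the line going up
print(is_exit_intent(300, 200))  # False: still well inside the page
print(is_exit_intent(10, 5))     # False: cursor was already above the line
```

Checking for a crossing, rather than just the current position, means the survey is triggered once as the visitor heads for the top of the page instead of repeatedly while the cursor stays there.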

Discovering why visitors leave without converting can help you identify and address potential issues.

Try our free website exit intent survey template ⤵️

Customer churn survey

A churn survey is a valuable tool for learning why customers leave your product. Even though the survey itself will not turn the tide, learning about the weakest aspects of your product can help you improve. Ultimately, it is an essential element of any retention strategy.

Try our free customer exit survey template ⤵️

Run online surveys with Survicate

Choosing the right survey method is crucial for gathering useful data. Survicate offers a user-friendly survey platform that allows you to create and distribute surveys through email, on your website, in your product, and even on mobile devices.

With Survicate, you can easily collect feedback and turn it into insights that can help improve your business. Whether gauging customer satisfaction or adjusting product features, this tool assists you in making informed decisions. It's straightforward to use and designed to provide valuable information efficiently.

So, why not give it a try? Sign up now, and take advantage of Survicate's 10-day free trial that unlocks all the Best Plan features. It's time to uncover the insights to steer your strategies toward success.