Figuring out if your customer satisfaction metrics show success or failure is tricky.

Unlike MRR or retention rate, they don’t directly correlate with revenue. And when wins and losses aren’t straightforward, deciding your thresholds and targets can be difficult.

This is why the question of “What is a good Net Promoter Score?” is so hard to answer.

Sure, we all want a high NPS. It indicates strong customer loyalty and growth potential and serves as a market differentiator. But where does a “high NPS” begin: at 30, at 70, or somewhere else altogether?

In this article, we’ll help you figure out what a good Net Promoter Score means for your company. Armed with this knowledge, you’ll make sense of your survey results and make sure NPS is actionable.

That being said, at Survicate we don’t believe in getting fixated on your score. As long as you listen to your customers and let their feedback fuel your growth, you’re on the right track.

We’ll throw in a few tips on what to do with your NPS survey results to make the most out of them – and ensure your next score is better than your last.

Sounds good? Read on!

First Things First: What is the Net Promoter Score (NPS)?

If you’re only just diving into the world of Net Promoter Score, here’s a quick summary of what it’s all about.

Net Promoter Score (NPS) is a customer satisfaction metric that measures your clients’ loyalty to your brand. It helps predict company growth and customer lifetime value.

To measure your Net Promoter Score, you need to send out a survey that asks a simple question:

“How likely are you to recommend [our company] to a friend or colleague?”

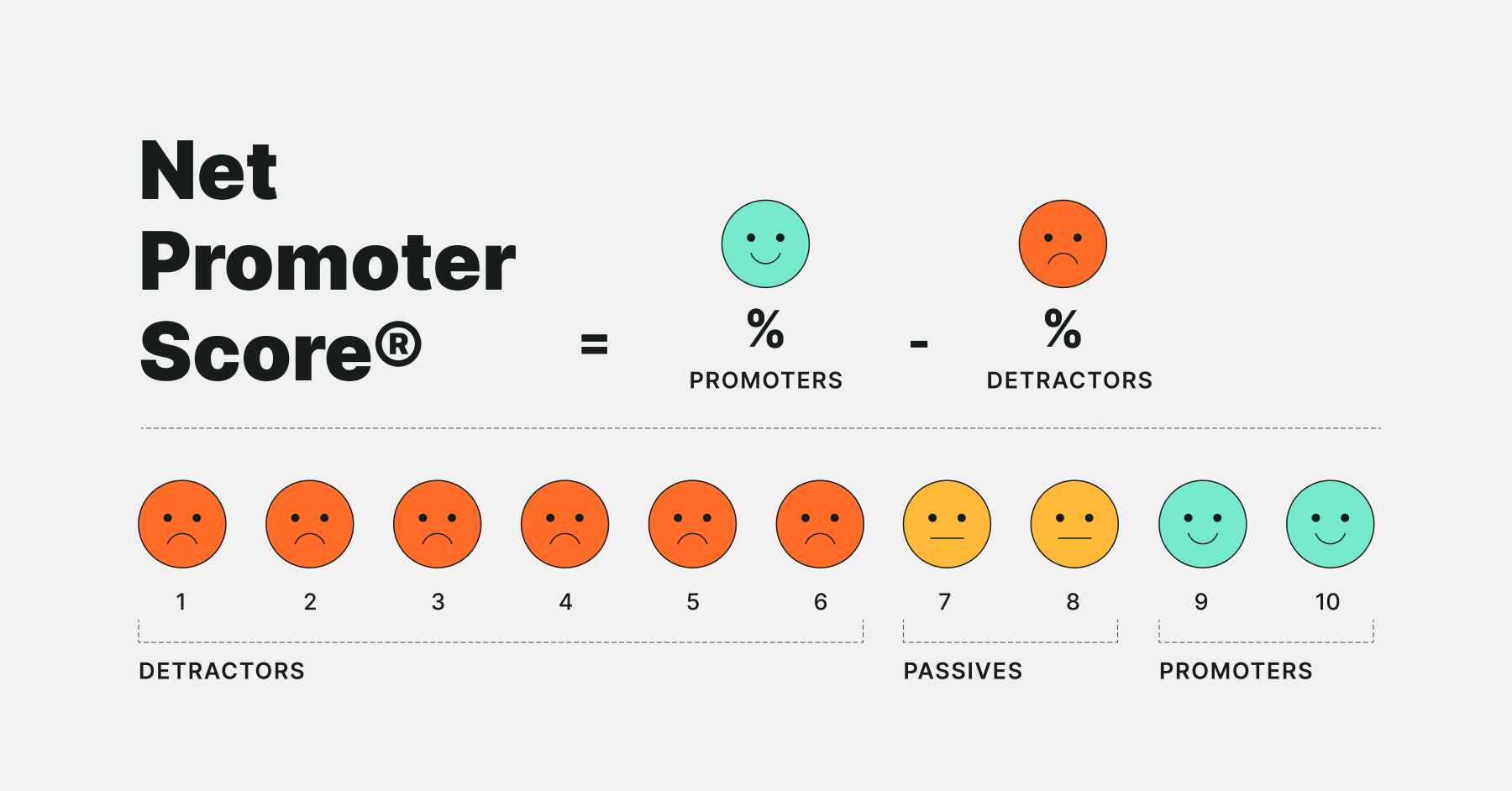

The survey participants can choose their answers on a 0-10 rating scale.

Once the survey is complete, the respondents are categorized into the following groups:

- Promoters: The respondents who chose the scores of 9 or 10. They’re your biggest fans, most likely to recommend you to their peers.

- Passives: The respondents who chose the scores of 7 or 8. They’re the most lukewarm client group. If you don’t act to win them over, they might switch to your competitors.

- Detractors: The respondents who chose the scores of 0 to 6. They’re your unsatisfied customers who are likely to churn and spread negative word of mouth about you.

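To calculate your Net Promoter Score, subtract the percentage of detractors from the percentage of promoters. Passives don’t enter the formula directly, but they still count toward the total number of respondents. For instance, if 50% of your survey respondents are promoters and 30% are detractors, your NPS is 20. Scores range from -100 (every respondent is a detractor) to 100 (every respondent is a promoter).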
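If you’d rather compute the score yourself from raw survey answers, here’s a minimal Python sketch of this calculation. It assumes you have a plain list of 0-10 answers; the function names are our own and aren’t part of any particular survey tool.

```python
def classify(score: int) -> str:
    """Map a 0-10 answer to its NPS group."""
    if not 0 <= score <= 10:
        raise ValueError(f"NPS answers must be between 0 and 10, got {score}")
    if score >= 9:
        return "promoter"
    if score >= 7:
        return "passive"
    return "detractor"


def net_promoter_score(scores: list[int]) -> float:
    """Return % promoters minus % detractors, on a -100 to 100 scale."""
    if not scores:
        raise ValueError("need at least one response")
    groups = [classify(s) for s in scores]
    pct_promoters = 100 * groups.count("promoter") / len(groups)
    pct_detractors = 100 * groups.count("detractor") / len(groups)
    # Passives are in the denominator (len(groups)) but in neither percentage.
    return pct_promoters - pct_detractors


# The example from the text: 50% promoters, 30% detractors (and so 20% passives).
responses = [10] * 5 + [8] * 2 + [3] * 3
print(net_promoter_score(responses))  # prints 20.0
```

Because passives count toward the total but not toward either percentage, adding more of them pulls the score toward 0 without changing its sign.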

However, no good Net Promoter Score survey is complete without follow-up open-ended questions. They let the respondents explain their initial choice and freely share their feedback. They also provide context to each score and let you take action – for example, if many detractors mention flaws in your customer service, you’ll know to focus on that first.

What Is a Good Net Promoter Score, Then?

Which scores between -100 and +100 can be considered good, then?

As you’ve probably guessed, it depends.

There are two main approaches to evaluating a Net Promoter Score: absolute and relative. Let’s discuss them in more detail.

Absolute NPS method


Just like the name suggests, the absolute NPS method assumes there’s an objective “good” (and “bad”) score that you can benchmark your own score against.

Here’s what the scale looks like:

- -100 to 0: You have more detractors than promoters, which is a bad sign for any business. Negative opinions about your company may well outweigh recommendations. Act quickly to improve customer satisfaction, especially if you’re in a very competitive market.

- 0 to 30: You have more fans than haters, which is positive. But there’s still a lot to improve before you can consider yourself successful.

- 30 to 70: This is where the most beloved companies live. If you’re in this range, especially if you’ve crossed the threshold of 50, you most likely have a loyal customer base and generate a lot of positive word of mouth. However, there’s always room for improvement: you have to keep delivering an impeccable customer experience.

- 70+: If you cross the magical threshold of 70, you’re the cream of the crop. Your customer experience program is stellar and heading in the right direction, your customers love you, and you can pat yourself on the back. However, a score this high is rarely attainable, and you shouldn’t obsess over achieving it.
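Here’s one way to express the absolute scale as code, as a quick sanity check for a score. The ranges in this scale share their endpoints, so this sketch assumes each lower bound is inclusive (a score of exactly 0 lands in the 0 to 30 band, and exactly 70 counts as 70+); the band labels are ours.

```python
def absolute_band(nps: float) -> str:
    """Place a Net Promoter Score on the absolute scale.

    Assumption: the ranges share endpoints, so each lower bound is treated
    as inclusive here (0 -> "0 to 30", 70 -> "70+").
    """
    if not -100 <= nps <= 100:
        raise ValueError(f"NPS must be between -100 and 100, got {nps}")
    if nps < 0:
        return "-100 to 0: more detractors than promoters"
    if nps < 30:
        return "0 to 30: positive, but lots to improve"
    if nps < 70:
        return "30 to 70: where beloved companies live"
    return "70+: cream of the crop"


for score in (-15, 20, 55, 72):
    print(score, "->", absolute_band(score))
```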

Assessing your Net Promoter Score based on the absolute method is easy and straightforward, which makes it a great introduction to the NPS landscape for beginners.

However, the absolute method doesn’t tell you much about actual growth opportunities. What really matters for any business is that a customer chooses it over its competitors.

Cue the relative NPS method.

Relative NPS method

The relative NPS method involves comparing your score to those of other companies in your industry. You can discover how you stack up against your competitors and see how much customer satisfaction matters in your niche.

How can you check your competitors’ Net Promoter Scores? Many companies share their scores publicly, so you can often find them online.

We also recommend you check out Survicate’s 2023 NPS benchmark report, especially if you’re a Survicate user – this way, you can compare your scores to other companies with similar survey methodology.

Here’s why the relative method is more reliable than the absolute method:

- Net Promoter Scores vary greatly from niche to niche. Benchmarking your score against direct competitors will let you set realistic goals.

- You’ll determine if your customer experience gives you a competitive advantage. If you’re consistently scoring better than your top competitors, turn your high Net Promoter Score into a USP (Unique Selling Proposition).

- Sometimes, sudden drops or rises in your Net Promoter Score are caused by outside factors, not your actions. Monitoring industry averages will let you figure out if that’s the case.

- You’ll know if customer loyalty and satisfaction matter in your industry. Some industries have notoriously low average NPS. Companies such as Internet and cable providers or construction firms usually score low – but they’re still successful. Maybe it’s time to look for your growth opportunities somewhere else?

However, the relative method is also not without its flaws:

- As mentioned above, some industries have very low average Net Promoter Scores. If that’s the case for you, benchmarking your score against competitors might be a trap. Don’t be satisfied with a low NPS just because it looks favorable in comparison. If you ignore customer experience, you risk getting upstaged by a competitor that does it better.

- A high NPS doesn’t automatically give you an advantage over competitors who offer something you don’t, so chasing their benchmarks might be pointless. KFC, for example, has a low average NPS of 14. But unless you can replicate their addictive chicken wings and compete with a powerful brand name, you won’t steal their customers even with an NPS of 100.

- Differences in survey methodology make NPS benchmarks less than fully reliable. If you’re a small SaaS company that surveyed 100 customers, you shouldn’t compare yourself to a company with a team of researchers meticulously tracking every success metric. When browsing benchmarks and reports, always check the information on the dataset and survey methodology.
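To see why a sample of 100 responses makes comparisons shaky, it helps to estimate the margin of error around a score. The sketch below uses a common statistical approximation (our addition, not part of the NPS method itself): each answer counts as +1 for a promoter, 0 for a passive, and -1 for a detractor, so NPS is 100 times the average answer, and a normal approximation gives a roughly 95% interval.

```python
import math


def nps_margin_of_error(pct_promoters: float, pct_detractors: float,
                        n: int, z: float = 1.96) -> float:
    """Approximate margin of error, in NPS points, for a sample of n responses.

    With answers scored +1 / 0 / -1, the variance of a single response is
    p_promoter + p_detractor - (p_promoter - p_detractor) ** 2.
    z = 1.96 corresponds to a roughly 95% interval (normal approximation).
    """
    if n <= 0:
        raise ValueError("need at least one response")
    p = pct_promoters / 100
    d = pct_detractors / 100
    variance = p + d - (p - d) ** 2
    standard_error = math.sqrt(variance / n)
    return 100 * z * standard_error


print(round(nps_margin_of_error(50, 30, n=100), 1))   # prints 17.1
print(round(nps_margin_of_error(50, 30, n=1000), 1))  # prints 5.4
```

With the 50% promoters and 30% detractors split from the earlier example, 100 responses give a margin of roughly ±17 points, while 1,000 responses narrow it to about ±5. Two scores that differ by less than that may not reflect a real difference.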

Keeping up with industry averages will help you stay on top of your niche and inform your market research. Still, we strongly recommend combining this method with benchmarking your own NPS and focusing on continuous improvement.

Benchmarking your own NPS

When it comes to customer satisfaction, the most important thing is to keep getting better. As long as your customers’ opinions of you (and, as a result, your Net Promoter Score) keep improving, you’re on the right track.

Here’s why we recommend benchmarking your own NPS to determine if your Net Promoter Score is good:

- Tracking your own NPS over time is the most direct way to test which business decisions affect the score. This will let you refine your business strategies.

- It prevents you from overfocusing on the number itself. Instead, you’ll concentrate on acting on customer feedback provided in answers to open-ended NPS questions. Avoiding negative word of mouth from detractors, turning passives into promoters, and turning promoters into brand advocates is the best way to make sure your score keeps growing and meets your KPIs.

- It motivates you not to rest on your laurels after surpassing the average NPS for your industry.

To benchmark your own NPS, it’s best to use reliable survey tools that will let you run NPS surveys, analyze results, and track changes.

For example, Survicate’s report panel allows you to track your NPS over time to see if your score and response rates improve.
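If you want to check the trend outside a survey tool, for instance on an exported spreadsheet, the core logic is short. This sketch assumes each response is stored as a (period, score) pair with periods written as "YYYY-MM"; the data is made up for illustration.

```python
from collections import defaultdict


def nps(scores: list[int]) -> float:
    """NPS for one group of 0-10 answers: % promoters minus % detractors."""
    promoters = sum(1 for s in scores if s >= 9)
    detractors = sum(1 for s in scores if s <= 6)
    return 100 * (promoters - detractors) / len(scores)


def nps_by_period(responses: list[tuple[str, int]]) -> dict[str, float]:
    """Group (period, score) pairs by period and return {period: NPS}, oldest first."""
    buckets: dict[str, list[int]] = defaultdict(list)
    for period, score in responses:
        buckets[period].append(score)
    # Sorting "YYYY-MM" strings puts the periods in chronological order.
    return {period: nps(scores) for period, scores in sorted(buckets.items())}


# Hypothetical sample data: (month, 0-10 answer)
responses = [
    ("2024-01", 10), ("2024-01", 6), ("2024-01", 8), ("2024-01", 9),
    ("2024-02", 10), ("2024-02", 9), ("2024-02", 9), ("2024-02", 7),
]

previous = None
for period, score in nps_by_period(responses).items():
    change = "" if previous is None else f" ({score - previous:+.1f} vs previous period)"
    print(f"{period}: {score:.1f}{change}")
    previous = score
```

A period with only a handful of responses can swing wildly, so it’s worth checking the response count per period before reading too much into a change.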

To create a sustainable Net Promoter system, you can also integrate Survicate with your CRM, collaboration, and analytics tools. This way, you’ll get more information on your respondents, receive notifications for a quicker follow-up, or combine your survey data with your other data points.

Make sure to check our guide on how to perform a successful NPS analysis.

Outside Factors That Impact Net Promoter Score

No matter which method you use to determine if your Net Promoter Score is good, you always have to take it with a grain of salt.

Many outside factors might impact your Net Promoter Score. Let’s go through a few of them.

Survey methodology

Are you sure you followed survey best practices, avoided survey biases, had a high enough response rate, and chose the right survey channel for your audience?

You never know which methods your competitors use to measure their NPS, so you should only treat their scores as reference points, not strict guidelines.

Before you set out to collect your NPS, make sure you know how to properly run an NPS campaign.

Consistency and frequency

Consistency and frequency are vital if you want your scores to be comparable enough for benchmarking.

If you run your surveys frequently, you’ll be able to pinpoint which changes might have affected your score.

On the other hand, consistency will help you minimize the impact of outside factors on your results. For example, if you keep switching between mobile and website as the distribution channel for your survey, you’ll never know whether fluctuations in your score mean your customer satisfaction program is failing or simply that your mobile users are generally less happy with your product.

Industry and niche

As we already discussed above, average scores vary from industry to industry. So before you start agonizing over bad results, check whether they’re really something you should worry about.

Companies that provide vital, high-stakes services and businesses operating in very competitive niches tend to have lower Net Promoter Scores because their customer bases are more demanding.

Culture?

Cultural bias is a controversial factor. While some, including one of the co-creators of NPS, claim that location should not be taken into consideration while analyzing NPS, some studies show otherwise.

Our recommendation is: if your NPS deviates from the average in one specific country, look for studies on cultural biases in surveys and take them into account. But don’t forget to investigate whether your customer experience program is lacking in that market!

How to Get a Better Net Promoter Score?

Now you know how to determine if your Net Promoter Score is good. It’s time to find out how to improve it.

The most important thing is that you put customer feedback at the heart of your company. If you keep reacting to your customers’ insights, removing friction, and delighting your customers, your score is bound to grow. Your customers are the people who know what using your product is really like. Even the best product owners won’t have the same experience.

But that’s an overwhelming piece of advice, especially if you’re a newbie. So let’s go through a few simple steps to start with. If you’re a Survicate user, you can employ these techniques right away.

And if you want to dig deeper, check out our guide on improving Net Promoter Score.

Segment your respondents for more accurate data

Use your survey data, especially the division of respondents into promoters, passives, and detractors, for more precise customer segmentation. It will let you deliver a personalized experience in all areas of your business, be it product, marketing, or customer service.

Here are a few examples of combining the power of tools that collect customer data with NPS insights, based on the integrations available at Survicate.

- If you’re an Amplitude user, you can correlate your data on product usage and user behavior with NPS survey data. You’ll find out how the satisfied and dissatisfied customers navigate your product and pinpoint your biggest strengths and weaknesses.

  For example, if you notice that most of your detractors stop using your product after interacting with a specific feature, check for a correlation. Maybe the feature is buggy or doesn’t deliver on its promises?

- Are you a marketer who uses HubSpot as your CRM? You can feed your NPS data into HubSpot to understand which of your users are detractors, passives, or promoters. This will allow you to trigger automatic workflows and campaigns based on survey answers. For example, you can run an automated activation campaign for passives or ask your promoters for reviews.

- Survicate’s integration with Intercom lets customer service teams update their contacts with survey responses to trigger automated campaigns, or identify users who gave specific answers and follow up with them individually.
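Under the hood, this kind of segmentation boils down to mapping each respondent’s score to a group and attaching a follow-up. The sketch below is a hypothetical illustration in plain Python: it doesn’t call any HubSpot, Intercom, or Survicate API (the integrations handle that syncing for you), and the actions simply mirror the examples in this list.

```python
from dataclasses import dataclass


@dataclass
class Response:
    email: str
    score: int  # 0-10 answer to the NPS question


# Hypothetical follow-up actions, mirroring the examples in the text.
FOLLOW_UPS = {
    "promoter": "ask for a review",
    "passive": "add to activation campaign",
    "detractor": "flag for personal follow-up by support",
}


def segment(score: int) -> str:
    """Standard NPS groups: 9-10 promoter, 7-8 passive, 0-6 detractor."""
    if score >= 9:
        return "promoter"
    if score >= 7:
        return "passive"
    return "detractor"


def plan_follow_ups(responses: list[Response]) -> dict[str, tuple[str, list[str]]]:
    """Return {segment: (follow-up action, [respondent emails])}."""
    plan: dict[str, list[str]] = {name: [] for name in FOLLOW_UPS}
    for r in responses:
        plan[segment(r.score)].append(r.email)
    return {name: (FOLLOW_UPS[name], emails) for name, emails in plan.items()}


responses = [
    Response("ana@example.com", 10),
    Response("ben@example.com", 7),
    Response("cy@example.com", 4),
]
for name, (action, emails) in plan_follow_ups(responses).items():
    print(f"{name}: {action} -> {emails}")
```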

Check all of our integrations to find the tools you use and get more ideas for customer segmentation.

Close the feedback loop

According to the creators of NPS, closing the feedback loop is an indispensable part of the Net Promoter program.

Let the customers know you heard their feedback. Include open-ended questions in your surveys (such as “What is the reason for your score?” or “What can we do to improve?”) and ask your customers if they’d be interested in receiving a follow-up call or email.

Then, have your customer support contact selected customers that provided the most valuable (or particularly negative) insights. And once you talk to them, make sure you do everything to fix their problems and bring their feedback to life.

Closing the feedback loop is easy if you integrate your survey software with your customer support, CRM, or email automation tool. You can also connect it to a collaboration tool such as Slack or Microsoft Teams to receive instant notifications about survey responses. This way, you’ll ensure no feedback falls through the cracks.

Make sure your customer-facing staff is well-versed in NPS

For a Net Promoter system to work, the whole company needs to be 100% on board.

That said, your customer support team is the first (and most often the only) point of contact with your organization. This is why you have to make doubly sure they understand the importance of customer satisfaction and can deliver stellar service. They also need to know the ins and outs of your NPS program, since they’re the main actors in following up with respondents and closing the feedback loop.

Ensure you provide your staff with training, education, and the right tools for the job, such as integrations with customer support or collaboration software mentioned above. If you use Survicate, make use of our unlimited seats. Invite your customer support team to the Survicate panel to help them stay on top of surveys and responses.

Summary

So, what is a good Net Promoter Score?

Under the absolute method, any score above 0 is a good sign. And if you cross the threshold of 50, you’re among the best of the best.

However, many factors affect Net Promoter Scores, so comparing your results with industry averages will help you make sure your targets are realistic. You’ll also see how much customer experience matters in your niche and determine whether you have a competitive advantage.

But the best thing you can do is benchmark your score against your own previous scores. It’s the best way to determine if your customer satisfaction program works, pinpoint your growth drivers, and motivate yourself to improve according to customer feedback.

Whichever method you choose to figure out if your NPS is good, don’t overfocus on the number. Having a high Net Promoter Score is not the end-all, be-all of your NPS program. The important thing is listening to your customers and putting their feedback at the heart of your business.

Dare to ask for feedback and drive your customer loyalty home with Survicate NPS software.

Grab your 10-day free trial and run your first NPS survey now, no strings attached.