SurveyMonkey is a survey and feedback management solution. You can use this tool to create and launch surveys, but also to analyze responses in-app.

The multiple survey types available in this survey tool cater to marketing, market research, user research, employee research, and more. SurveyMonkey is an established player and a common choice for small businesses to enterprises.

But it's not a be-all-end-all feedback solution. In this article, we’ll go through 16 SurveyMonkey alternatives (including free options), so that you can choose the best one for you, dividing them into three categories: business, academic, and personal usage.

Why choose SurveyMonkey?

SurveyMonkey is, among other use cases, a great employee feedback solution. You can use SurveyMonkey to conduct employee satisfaction surveys, boosting productivity and morale.

But you can also use it for measuring customer satisfaction, collecting event feedback, or creating online polls.

The design interface of SurveyMonkey is not the most user-friendly thing in the world, but you get over 230 templates to help speed up your work. You can choose from Net Promoter Score surveys, Customer Satisfaction surveys, Exit Intent, and more.

The editor supports advanced branching and conditional logic and you don’t need to code while at it. You can even randomize answers and add a scoring mechanism or a progress bar.

With this survey platform, you’ll be able to fetch feedback in real-time and take advantage of multilingual features. The SurveyMonkey software integrates with popular third-party tools like Automate.io, HubSpot, and Salesforce.

SurveyMonkey also lets you conduct feedback analysis inside their tool. The AI Genius engine calculates and even estimates the completion rate for live and future surveys for quantitative data. To analyze qualitative insights, use their sentiment analysis and Word Cloud.

Yet SurveyMonkey has its downsides.

Why should you consider SurveyMonkey alternatives?

Some may start to look for an alternative to SurveyMonkey because of its outdated designs or WordPress-settings-like survey creation.

But it’s also often the lack of flexibility on pricing or ready-to-send templates that may make you consider going for a SurveyMonkey alternative.

SurveyMonkey does not offer any free trial. There is a basic free plan with highly limited features. Once you receive over 100 responses, you may have trouble accessing the collected data.

Moreover, Basic Plan (free) users cannot export collected data to Excel.

One user called this “holding your responses hostage until you upgrade the plan.”

Going further, some reviewers are unhappy with the level of customization you can apply to surveys while on the free plan.

Overall, the most useful features are only available on the (steep) paid plans.

The pricing plans also don’t offer a good price-to-value ratio for every organization size. If you are a small to midsize business, you will likely be paying for features you won’t use.

Finally, if you downgrade your plan, you will lose access to the collected data.

Diving into a specific case, one user described their experience in the following way:

- As a student, they were interested in conducting one study with a single question

- They chose SurveyMonkey, as it was advertised as the number 1 free survey tool

- They collected over 100 responses, which means they were forced to purchase a subscription plan to review the results

- They decided not to pay for an expensive subscription plan, forfeiting their results

- As a result, they missed a deadline and decided to stick to a free solution—Google Forms—for the foreseeable future.

SurveyMonkey pricing

SurveyMonkey offers several plans for teams and individuals.

Individual plans range from $40 per month to around $80 per month, while the Team plans start from $30 per user per month.

The plans vary in the number of available responses (for the individual plans) and additional features.

You can also request a demo to purchase an Enterprise plan which is tailored for large organizations and includes enhanced security.

Best SurveyMonkey alternatives: business use

Now that you know why you might look for an alternative to SurveyMonkey, we’ll go over the 16 best options.

That said, the right survey software depends on your use case, so we’ve divided the alternatives into three categories: business, academic, and personal usage.

The following seven SurveyMonkey alternatives are the best picks for customer feedback, experience, and satisfaction, so they suit anyone looking for business-related survey responses.

1. Survicate

Survicate is an effortless, yet powerful survey and customer feedback platform. With it, you can easily run NPS, CSAT, and CES surveys, and accurately analyze collected responses.

⭐G2 rating: 4.6

Going for a direct comparison with SurveyMonkey, according to G2, there isn’t a single category where SurveyMonkey surpasses our tool.

We win or match it in ratings for features like:

- Ease of use

- Ease of admin

- Customization/branding

- BI tools integrations

- Quality of support

- Reporting & analytics

- Survey builder

- And more.

With Survicate, you can start building your surveys the moment you sign up. You can start from scratch or use one of 300 ready-made templates, designed for the best response rates. To make it even more effortless, use our AI survey builder that generates accurate surveys in a matter of minutes.

Going further, there is no need to code to get up and running with your surveys.

Survicate offers hundreds of one-click integrations, with popular tools like HubSpot, ActiveCampaign, Mailchimp, and more. To get even more out of collected feedback, you can connect Survicate with Mixpanel or Productboard, to further use your survey results.

Our plans include more than one seat, so you can invite your team members to collect and analyze insights with you. With our interactive filters, for example, you get an exhaustive view of score changes across days, weeks, months, quarters, and years.

Your survey results overview in the Survicate panel will look as clear as day, with accurate data presented, including the date of the last response, the completion rate and time, total and unique views, and more.

Survicate is also a great solution for qualitative data analysis thanks to its newest additions—AI analysis features: Insights Hub and Research Assistant. If analyzing survey data took weeks out of your time, you’ll be pleasantly surprised what these features can do for you.

Survicate free Best plan trial

Price: free

Get all the Best plan features on a 10-day free trial with website, in-product, mobile, email, feedback button, and link surveys available, along with 2500 responses you can collect per month. Test out AI feedback analysis, add custom branding or ending screens, and check out behavior and segmenting targeting.

💡Besides just a free trial, you can also use Survicate for free, using its Free Plan. While on it, you can create your surveys, even using an AI builder, collect up to 25 responses per month, have the CX metrics calculated for you, integrate with other tools, or customize your questions with skip logic.
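To make "CX metrics calculated for you" concrete, here is a minimal sketch of how two of these metrics are commonly computed by hand. It uses the standard NPS definition (share of promoters scoring 9 or 10, minus share of detractors scoring 0 to 6) and a common CSAT convention (share of 4 and 5 ratings on a 5-point scale). The function names and sample data are illustrative assumptions, not Survicate's implementation. CES is left out because its scale and scoring vary between teams.

```python
def nps(scores):
    """Net Promoter Score from 0-10 ratings: % promoters (9-10) minus % detractors (0-6)."""
    if not scores:
        raise ValueError("need at least one score")
    promoters = sum(1 for s in scores if s >= 9)
    detractors = sum(1 for s in scores if s <= 6)
    return 100 * (promoters - detractors) / len(scores)


def csat(ratings, satisfied_from=4):
    """CSAT as the percentage of ratings at or above `satisfied_from` on a 1-5 scale.

    The threshold of 4 is a common convention, assumed here for illustration.
    """
    if not ratings:
        raise ValueError("need at least one rating")
    satisfied = sum(1 for r in ratings if r >= satisfied_from)
    return 100 * satisfied / len(ratings)


# Made-up sample responses, for illustration only.
sample_nps = [10, 9, 8, 7, 6, 3, 10, 9, 5, 8]
sample_csat = [5, 4, 3, 5, 2, 4, 4, 1]

print(nps(sample_nps))    # 4 promoters, 3 detractors out of 10 -> 10.0
print(csat(sample_csat))  # 5 satisfied out of 8 -> 62.5
```

NPS ranges from -100 to 100, while CSAT is a plain percentage, so the two are not directly comparable.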

Good plan

Price: $99 per month

Get up and running with collecting and analyzing customer feedback. The Good plan includes 500 responses per month, 5 team seats, link, email, website, and in-product surveys, an AI survey creator, AI data analysis, and much more.

Better Plan

Price: $149 per month

The Better plan sweetens the deal with the possibility to remove Survicate's branding, add mobile surveys to your inventory, or explore the advanced targeting options, along with 1,000 responses to collect each month. It also comes with 10 team seats, so you are not forced to pay for each user.

Better Than The Rest plan

Price: starting from $299/month

If your needs don’t fit any of the above plans, the Better Than The Rest plan should be just the right choice. It comes with a custom pool of responses, custom legal and security agreements, a custom number of team seats, and more.

2. forms.app

forms.app is a form builder tool that could be a great option for your business. It has an interface that is easy to use and requires no coding knowledge, allowing you to produce forms, surveys, and quizzes simply. Even if you are new to creating these types of documents, the process is straightforward thanks to its user-friendly platform.

⭐G2 rating: 4.5

One of the best things about forms.app is that it offers many advanced features for free. These features include multiple question types, payment collection, advanced analytics, product baskets, signature collection, and conditional logic.

Additionally, forms.app provides an AI form generator feature that enables you to create forms and surveys even faster. All you have to do is describe what you want to create, and the AI will do the rest by suggesting options based on your question titles, saving you time and energy.

It also provides over 3000 customizable templates that you can use to create professional-looking forms and surveys. You can share these documents in various ways, such as embedding them into websites, sharing them as a QR code, or publishing them on social media.

forms.app has a free plan that includes almost every advanced feature. Paid memberships range from 25 USD to 99 USD per month.

3. HubSpot

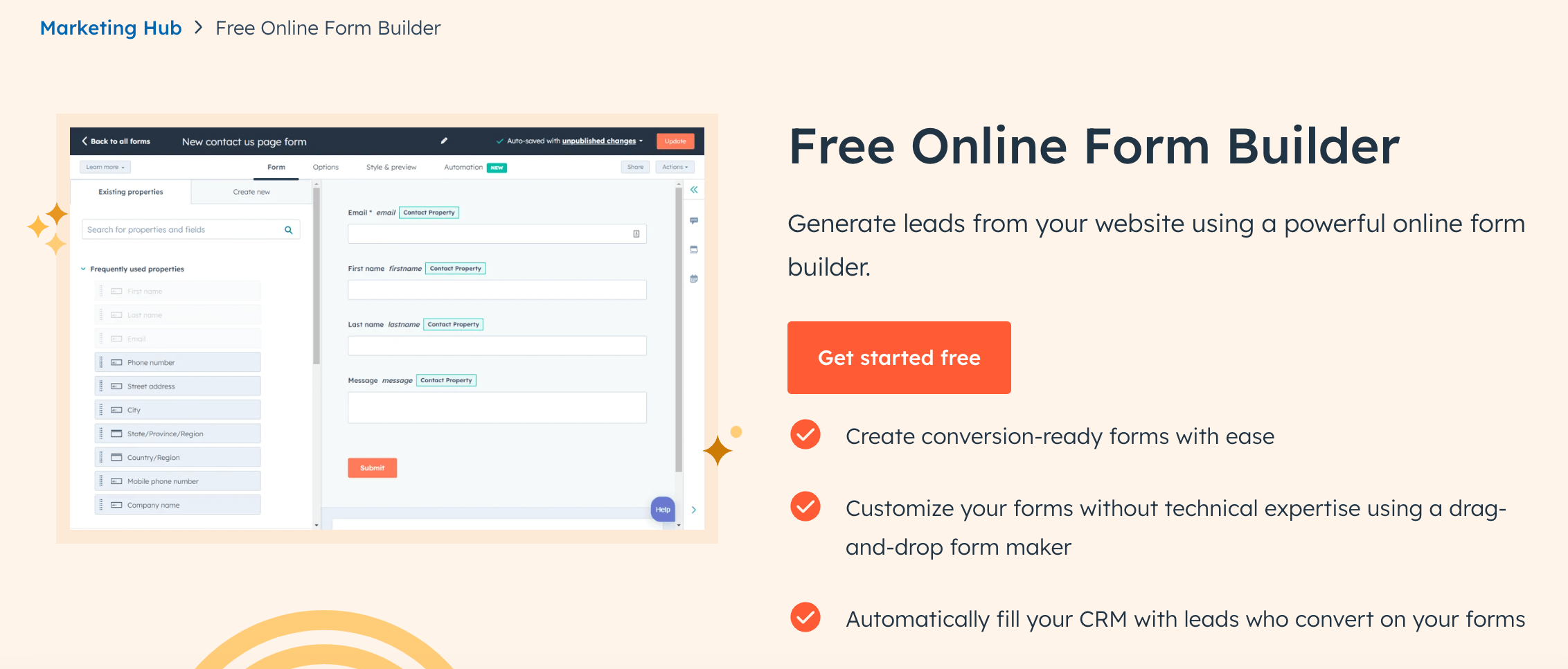

HubSpot's free online form builder is a decent tool designed for businesses seeking simple and intuitive form-building solutions. With its user-friendly interface, HubSpot's tool empowers users to create and deploy customized forms, whether for lead generation, customer feedback, or event registration.

⭐G2 rating: 4.4

Key features:

- Intuitive drag-and-drop interface for form creation and customization, catering to users of all technical backgrounds

- Access to pre-built form templates tailored for various use cases, streamlining the form-building process and saving valuable time

- Integration with HubSpot's CRM and marketing platform, enabling users to capture and manage leads effectively while automating follow-up actions.

- Form analytics and reporting functionalities, allowing users to track form performance and gain insights into user behavior for informed decision-making.

As part of HubSpot's free tier, the form builder is accessible to businesses of all sizes at no additional cost, but the form features on the free plan will be limited.

If you want to go for more functionalities, you’d have to go for the Starter plan that costs $20 per month or dive all in and get the Marketing Hub Professional for $880 a month.

4. Opinion Stage

With the Opinion Stage survey builder, you can easily create professional surveys without any coding. In addition to surveys, this user-friendly tool also allows you to make interactive quizzes, polls, and forms.

⭐G2 rating: 4.8

Opinion Stage uses a one-question-at-a-time format that minimizes respondent fatigue, making the experience feel like a genuine interaction. It also has smart logic features that personalize surveys by adjusting questions based on previous responses.
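Logic that adjusts questions based on previous responses can be pictured as a small lookup table: each question maps an answer to the next question to show. The sketch below is a generic illustration of that idea, not Opinion Stage's actual format. The `SURVEY` structure, the question ids, and the `walk` helper are all hypothetical.

```python
# Hypothetical survey definition: each question maps an answer to the next question id.
SURVEY = {
    "q1": {
        "text": "Have you used our product in the last month?",
        "next": {"yes": "q2", "no": "q3"},
    },
    "q2": {"text": "How satisfied are you (1-5)?", "next": {}, "default": "end"},
    "q3": {"text": "What stopped you from using it?", "next": {}, "default": "end"},
}


def walk(survey, answers, start="q1"):
    """Return the ordered list of question ids a respondent with these answers sees."""
    path = []
    current = start
    while current != "end":
        path.append(current)
        question = survey[current]
        answer = answers.get(current)
        # Follow the branch for this answer, else the question's default, else stop.
        current = question["next"].get(answer, question.get("default", "end"))
    return path


print(walk(SURVEY, {"q1": "yes", "q2": "4"}))          # ['q1', 'q2']
print(walk(SURVEY, {"q1": "no", "q3": "Too pricey"}))  # ['q1', 'q3']
```

Each respondent answers only one of the two follow-up questions, which is how this kind of logic keeps surveys short.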

While SurveyMonkey provides basic customization, Opinion Stage offers complete customization options, including custom CSS and white-label branding. Additionally, there are over 100 templates to get you started and an AI-powered tool that can create surveys, quizzes, and forms in minutes.

Opinion Stage’s surveys are designed for all use cases, including:

- Audience engagement

- Lead collection

- In-depth research.

The platform seamlessly integrates with popular tools like Zapier, HubSpot, Slack, and Mailchimp. It also has advanced visual analytics allowing you to track real-time responses, with email notifications for every submission.

Opinion Stage offers a free plan with unlimited survey creation and up to 25 responses per month. Paid plans start at $25 per month, enabling you to collect up to 1,000 responses.

5. Alchemer

Alchemer, formerly SurveyGizmo, is a survey platform for enterprises. It may not be the best pick if you are a small to midsize business.

⭐G2 rating: 4.4

They offer advanced customization and real-time insight collection. You can use many question types, oftentimes more than their direct competitors.

They support multiple survey types, which makes collecting diverse customer data easy. You can customize branding and set up skip logic and branching as you want.

You can also create mobile in-app surveys if that is how you communicate with your users.

There is a reporting and analysis dashboard, so you can easily go through your collected data, all in one place. Moreover, you can integrate Alchemer with tools like Salesforce or connect it to other systems through webhooks.

There are pre-designed survey templates to save you time when starting your campaign.

Compared with SurveyMonkey, surveys are easier to set up, including features like multilingual questions and multimedia within the forms. The tool also has an intuitive CMS and allows you to download result reports in multiple formats.

On the other hand, reviewers complain about confusing sub-menus and the fact that subscription plans are seat-based. So if you want many of your team members to access surveys and results, it may not be the best choice.

Overall, Alchemer offers four plans, from the lowest-tier Collaborator plan starting at $55 per user, per month, to the highest-tier Business Platform plan that comes with custom pricing.
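To see why seat-based pricing matters for larger teams, here is a rough back-of-the-envelope sketch using only the starting prices quoted in this article: Alchemer at $55 per user per month, SurveyMonkey Team plans at $30 per user per month, and Survicate's Better plan at $149 per month with 10 seats included. The helper names are made up for illustration. The plans differ in features and response limits, so this compares headline seat costs only.

```python
def monthly_cost_per_seat(price_per_user, team_size):
    """Monthly cost of a plan billed per user."""
    return price_per_user * team_size


def monthly_cost_flat(flat_price, included_seats, team_size):
    """Monthly cost of a flat-priced plan, or None if the team exceeds the included seats."""
    return flat_price if team_size <= included_seats else None


team_size = 10  # assumed team size, for illustration

alchemer = monthly_cost_per_seat(55, team_size)
surveymonkey_team = monthly_cost_per_seat(30, team_size)
survicate_better = monthly_cost_flat(149, 10, team_size)

print(alchemer)           # 550
print(surveymonkey_team)  # 300
print(survicate_better)   # 149
```

With a single user the picture flips, since per-seat plans are then cheaper than a flat plan with seats you don't use.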

6. QuestionPro

QuestionPro is noted for its wide range of applications. Customers use QuestionPro to track and manage:

- Customer experience

- The pulse of the customers

- Employee management.

⭐G2 rating: 4.5

The tool offers ready-made survey templates with customizable design themes. Integrations with popular tools like Salesforce, as well as webhooks, are available. QuestionPro is also known for its multilingual options.

In the survey editor itself, you can alter the logic and piping. For better results, you can use advanced filtering and segmentation. To smooth out the data collection process further, you can set up email reminders and scheduling.

QuestionPro advertises the tool as easy to use, with only 5 minutes needed to launch a survey. The free plan gives you access to basic features, such as 30 question types. You can also export data in XLS and CSV.

Paid plans start from $83 per month, billed annually. Access to QuestionPro's Research, CX and Workplace suites is available separately, at an extra cost.

7. SoGoLytics

SoGoLytics is a feedback management software with two prominent products: SoGoCX and SoGoEX. The aim of SoGoCX is to boost customer experience, while SoGoEX focuses mostly on boosting employee engagement.

⭐G2 rating: 4.6

Use SoGoLytics to create custom surveys, polls, and quizzes for your customers. As with the competitors described above, you can use survey templates and integrate them with tools like Salesforce, Zapier, and Google Analytics.

The survey editor works on a drag-and-drop basis, making the learning curve practically non-existent. You can also get response alerts to stay on top of your real-time feedback.

Moreover, you can automate your feedback collection with their API. Add branching and skip logic to optimize the results you get. In terms of analysis, there are data visualizations that you can use in meetings and presentations.

But the solution isn’t perfect. The basic, free version has limited features, and reviewers complain about server and programming-related issues.

When it comes to the paid plans, they come in two versions, either dedicated to individual users and small teams or whole businesses and enterprises. The individual plans start at $25 per month, while all three plans dedicated to enterprises require a custom quote.

Best SurveyMonkey alternatives: academic research

If you’re all about finding an alternative to SurveyMonkey for academic research specifically, you’re in the right place.

The following section of the article covers the best SurveyMonkey alternatives for academic use, i.e., for students, professors, and researchers.

8. Qualtrics

Qualtrics is an all-in-one survey tool loaded with many functionalities, especially targeting healthcare, government, financial services, and education-related industries.

⭐G2 rating: 4.4

The bank of pre-built templates in this alternative to SurveyMonkey includes interesting surveys, such as:

- Vote and rank survey

- Employee suggestion box

- Meeting feedback

- Quick poll

- And more.

Qualtrics Experience Management (XM) platform is a dedicated solution for both market and academic research, with a particular focus on improving the experience of staff and students.

To further ease the process of getting feedback from teachers and students, you get to use Qualtrics’ research-backed survey templates.

Highlights of the survey creation process include a drag-and-drop editor and a predictive intelligence tool that helps you obtain actionable insights from the data you collect.

The tool will also recommend survey designs while you edit your questions. You can integrate it with Adobe, Marketo, Salesforce, and more.

With 30 types of graphs and crosstab capabilities, data visualization is quite easy. You can export all of this in multiple formats, including popular ones like CSV and PDF.

In direct comparison with SurveyMonkey, Qualtrics is a better choice if you want highly customized surveys. On the other hand, reviewers complain about the complexity of adding contact data to directories.

As for costs, Qualtrics’ pricing is unfortunately available solely on request, which makes the decision process even harder. Qualtrics customers on G2 have noted this lack of pricing transparency.

9. LimeSurvey

LimeSurvey is an open-source survey tool, which means you can modify the software according to your individual needs. It is free to use with a few features, and the paid plans are a bit cheaper than those of SurveyMonkey.

⭐G2 rating: n/a

LimeSurvey offers a question library to create accurate surveys quickly. You can host your survey in many ways, including the option of self-hosting. The tool specializes in market research, but also lists academic research and course evaluation as the main use cases.

As opposed to Qualtrics, which focuses more on the experience of students and academic staff, LimeSurvey is better suited to doing actual academic research, whether you’re in a collaborative project or writing a dissertation.

If you’re heavy on customization, you can create white-label surveys and add your own branding in LimeSurvey. Advanced targeting lets you host surveys on custom URLs. But the software also supports rich media, branching, and skip logic.

The user interface is very responsive, and reviewers like the navigation. When it comes to analyzing the results, you can do so using graphs, bars, and charts.

On the downside, email handling is limited, and it may be difficult to train your employees or teammates due to the steep learning curve. The tool may also be suboptimal for individual use.

Paid plans start at $34 per month, making LimeSurvey a little bit cheaper than SurveyMonkey.

10. SmartSurvey

SmartSurvey is again a software dedicated specifically to government, education, healthcare, non-profit, and hospitality industries.

⭐G2 rating: 4.6

For academic purposes specifically, SmartSurvey offers over 130 templates, an AI survey builder, all question types, results exporting, and charts and graphs for analyzing the results.

SmartSurvey customers complain that many essential features are locked behind a paywall or expensive subscription plans. But if you do decide to invest, you can take advantage of highly advanced question types and logic in your surveys.

Moreover, the SmartSurvey Enterprise plans include out-of-the-box customer experience questions, dashboards, and complex variable options. You can add multimedia like images and videos to your surveys and translate the questions into many languages.

On the other hand, the free version will not let you filter collected data or export it into formats other than Word and PDF. White-label surveys are only available on the Enterprise plan. The same goes for survey customization.

No credit card is needed for the free plan, but you can only have one named account user.

The free version also comes with unlimited surveys, but they can only have up to 15 questions, and you can collect up to 100 responses per month.

Paid plans start at £30 per month with unlimited responses on the annual plan or 1000 responses on the monthly plan. There is a rolling subscription, but reviewers claim it is easy to cancel, unlike SurveyMonkey.

A special deal for students specifically is the possibility of purchasing SmartSurvey for the equivalent of £7.50 per month when going for annual billing.

11. SurveySparrow

SurveySparrow is a conversational survey builder. With it, you can make visually attractive surveys with video snippets as survey backgrounds, which can be muted or set up to play on loop.

⭐G2 rating: 4.4

Again, SurveySparrow is one of the best SurveyMonkey alternatives for academic use specifically, since they target the education industry. Just as with Qualtrics, SurveySparrow gives you a way to create and distribute surveys that aim to boost student satisfaction.

Going further, the tool offers many analysis options, with better reviews than those of SurveyMonkey. Advanced filtering options make it easy to sort responses, which you can view on graphs and charts.

If you want to take SurveySparrow for a test drive, you can go for its 14-day free trial.

But to get information on the paid plans, you need to fill out a form with your name, business email, and a phone number. If not, the pricing details are censored with asterisks—very secretive of SurveySparrow.

Best SurveyMonkey alternatives: personal use

If you are looking for free SurveyMonkey alternatives, especially for personal use, consider the following five.

12. Google Forms

Google Forms is a part of Google Suite—it’s free and comes with native integrations to Sheets, Drive, and more.

⭐G2 rating: 4.6

With Google Forms, you can share your forms and surveys online via links. This tool records responses in real time and supports collaboration between team members to analyze feedback results.

The smart validation feature ensures accurate formatting in emails and other distribution methods.

Google Forms is the most popular free SurveyMonkey alternative. It supports branching and skip logic, and works on many devices like tablets, smartphones, and desktops.

What’s pretty convenient about this SurveyMonkey alternative is that all edits you make to surveys are saved automatically in your Google Cloud.

On the downside, Google Forms may look less professional than SurveyMonkey, the distribution methods are limited, and there are no on-site, in-app pop-up capabilities.

13. Typeform

Typeform is a good choice for personal use as it specializes in forms like quizzes. On the other end of things, this tool is not the best pick if you want to collect extensive or complex data.

⭐G2 rating: 4.5

You and your respondents can use keyboard shortcuts to get through the forms faster. You can add images and videos, and the forms will be compatible with all browsers. Typeform also offers on-site and in-app surveys.

💡If you want to quiz your friends on fun topics—Typeform is a great solution.

On the downside, even though there are pre-designed survey templates, they are hard to customize. There is also no static view: all questions are displayed one by one.

You can integrate Typeform with popular tools like Canva, Trello, and HubSpot.

However, there is a rigid payment structure and a security issue—all forms show up on the Typeform website, making them available to spam and bots.

Choose a Typeform alternative if you want to collect many responses or payment data.

As for the costs, Typeform offers a total of eight plans, with the main division being between the Core and the Growth plans. The Core plans start at 25 EUR per month, while the Growth plans start at 179 EUR per month.

But there is also a free plan available, in place of the free trial.

14. SurveyPlanet

SurveyPlanet is yet another free SurveyMonkey alternative. With it, you can create unlimited surveys with unlimited questions and collect unlimited responses.

⭐G2 rating: 4.1

It’s a pretty good solution for basic surveys.

The focus here is on sharing free surveys quickly. The design is responsive, and the results review is easy and intuitive, albeit limited when it comes to filtering and sorting.

If you are a new business interested in product research in the ideation phase, you can take advantage of responses from a pre-screened pool of qualified participants SurveyPlanet offers.

The free version comes with an exhaustive-enough set of features to get you started with your surveys.

There are also paid plans available if you’re looking for more advanced functionalities. Pro plans start at $20 per month or $180 per year, while the Enterprise plan is $350 per year, which makes SurveyPlanet quite an affordable solution.

15. Survio

When you first start creating a survey with Survio, you’ll get to peruse their template library—and there are many categories and options to choose from. Each template comes with a theme for a professional look.

⭐G2 rating: 4.4

Survio offers integrations through Zapier, meaning you can connect it to thousands of tools. This survey software is particularly recommended for HR specialists.

They advertise how easy it is to:

- Screen potential candidates

- Analyze employee feedback

- Assess employee performance

- Carry out exit surveys.

When it comes to Survio’s limitations, data analysis is very limited on the free version of the tool. If you don’t purchase a paid plan, you can only collect 100 responses.

For paid plans, you have four options, from the Mini plan that will cost you about $15 per month to the Pro Business plan at $80 a month. If your needs extend beyond anything the regular plans offer, you can also go for a custom-made plan.

16. SurveyLegend

Last but certainly not least—SurveyLegend. This tool is a pretty intuitive option. To create a new survey, you simply pick the type of question you want to include on the left and drag it onto your survey preview on the right.

⭐G2 rating: 4.4

On the downside, you may hit a paywall when adding more complex options. You will, however, be able to add images to questions and take advantage of live analytics.

Another mixed blessing is that surveys are easy to duplicate, but this feature is only available on the paid plans.

In turn, SurveyLegend is great if you want to share your forms via email or SMS, as well as social media channels like Facebook, Twitter, or blogs.

The limitations on the free plan are heavily visible, though. The free version allows only three surveys with up to six pictures, and you won’t be able to export data. You can use one conditional logic element, and the surveys will have ads and include watermarks.

Paid plans start at $19 with up to 20 surveys and 30 pictures. With them, you can export up to 1000 responses per survey and use 10 conditional logic elements.

What’s worth noting is that your surveys will be watermarked on every plan except the highest-tier Legendary plan.

Launch your surveys with Survicate

The 16 survey tools described above are the most popular SurveyMonkey alternatives out there. Like every tool, though, they have their pros and cons, and some fit specific categories better than others.

We hope this breakdown of the best SurveyMonkey alternatives for business, academic, and personal usage has made it easier for you to decide which software will best meet your needs.

And if not, make sure to play around with the free versions and trials of these tools yourself.

With Survicate, you get access to all Best plan features on the 10-day free trial, so there's no excuse not to try it out! Happy feedback collecting!

Frequently asked questions: 16 best SurveyMonkey alternatives

Why look for a SurveyMonkey alternative?

SurveyMonkey is a quite popular survey software, yet it has its downsides. You may start to look for an alternative because of its inflexible pricing, like its heavily limited free plan or the fact that it's more catered to bigger organizations. Some users also noted its lack of customization options or the inability to export data while being on the free plan as their pain points.

What are the best SurveyMonkey alternatives for business use?

The best SurveyMonkey alternatives for business use include Survicate, if you want a modern customer feedback platform, and QuestionPro, if you’re all about employee management. Other options include forms.app, HubSpot’s form builder, Opinion Stage, Alchemer, and SoGoLytics.

What are the best SurveyMonkey alternatives for academic research?

Academic researchers, on the other hand, may try their luck with Qualtrics, LimeSurvey, SmartSurvey, or SurveySparrow. If you’re looking to improve the satisfaction of your students through course reviews and feedback, test out Qualtrics and SurveySparrow. If you’re after academic research, try out LimeSurvey and SmartSurvey to see which one fits you better.

What are the best SurveyMonkey alternatives for personal use?

The most popular SurveyMonkey alternative for personal use specifically is Google Forms. It’s available for free, right in the Google Suite, so all you need is a Google account. But other options include Typeform, SurveyPlanet, Survio, and SurveyLegend, if Google Forms is not your cup of tea.

What are the best free SurveyMonkey alternatives?

There are quite a few free SurveyMonkey alternatives, even including advanced customer feedback platforms, like Survicate. Many SaaS tools offer a free-of-charge plan, some even in place of a free trial, like Typeform. But the best free SurveyMonkey alternative would have to be our own tool—Survicate. Simply because it gives you 3 team seats, all survey types, an AI survey builder, and up to 25 responses per month, completely for free.