TL;DR

- Leverage direct feedback: VoC feedback provides direct insights from individual customers rather than aggregated data, enabling personalized responses and improvements to the customer experience.

- Close the feedback loop: Inform customers when their feedback has led to changes, enhancing their experience and fostering a sense of being valued and heard.

- Structured approach is key: Implementing a structured VoC program with clear objectives and diverse customer data collection methods, including surveys and interviews, ensures consistency and actionable insights.

- Use surveys for competitive analysis: Running online VoC surveys can reveal customer preferences and areas for improvement, providing competitive intelligence by comparing feedback against industry standards.

- Continuous measurement and improvement: Regularly measure customer satisfaction metrics and make iterative improvements based on VoC feedback to maintain alignment with customer needs and expectations.

Who is the number one person you should turn to to grow your business? If your answer is anything but “my customers,” you should reconsider it.

In an age where discovering your competitor is one Google search away, your customers are your business's most precious asset and the best source of information for improving it.

And if you’re interested in acquiring more customers as well as making the existing ones happier, you have to collect voice of customer feedback.

Today, we’ll explain VoC feedback, how to collect it, and, most importantly, how to use it.

What is Voice of the Customer feedback?

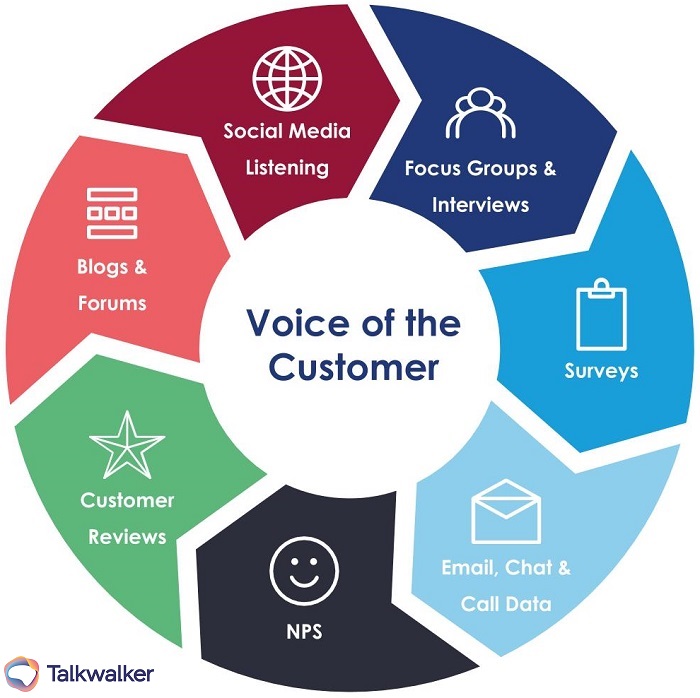

VoC feedback is any type of feedback you can collect by actively listening to your customers and their needs. It can be gathered across different platforms and touchpoints by various departments in your company.

You may think this is just your regular customer feedback and wonder what the difference is. What makes Voice of the Customer feedback different is that it focuses on the individual customer rather than trying to lump all responses together in aggregated data sets.

One of the most important aspects of this type of feedback lies in the increased focus on closing the feedback loop. In other words, once a customer leaves their feedback and you make changes based on their input, you let them know about this.

As a result, your customer experience metrics can improve at both the global and more granular levels.

Now, let’s look at some practical examples of collecting this specific type of feedback.

Voice of the Customer program structure

To establish a robust Voice of the Customer (VoC) program, you need to follow a structured approach that includes several key components:

- Objective definition: Clearly define the goals of your VoC program. Are you trying to improve product quality, enhance customer service, or identify opportunities for innovation? Your objectives will guide the structure and implementation of the program.

- Customer data collection: Gather feedback using various VoC tools and methodologies such as:

  - Surveys: Deploy short and focused VoC surveys to collect quantitative and qualitative data.

  - Customer interviews: Conduct one-on-one conversations for in-depth insights.

  - Analytics: Employ VoC analytics to mine and interpret data from customer interactions.

- Cross-functional involvement: Ensure all departments, from product development to sales, understand their role in the program. This fosters a company-wide culture that values customer feedback.

- Action plan: Develop a system for immediate response to feedback, incorporating a closed-loop process. This will ensure that feedback is not only collected but also acted upon.

- Continuous improvement: Review feedback trends and program performance regularly to refine methods and strategies.

A structured VoC template can guide your data collection and analysis, ensuring consistency and clarity in the insights gathered. Remember, an effective VoC program is cyclical and iterative, adapting over time to the evolving needs of both your business and your customers.

How to collect Voice of the Customer feedback?

Collecting feedback through VoC strategies enables you to understand your customers' experiences and expectations comprehensively. By employing specific methodologies, such as surveys and research, you can pinpoint customer preferences and areas that may require improvement.

Voice of Customer surveys

You use VoC surveys to acquire direct feedback from your customers on various aspects of your product or service. Key steps include:

- Defining objectives: Determine what you want to learn from your customers, such as product feature enhancement, service quality, or overall satisfaction.

- Designing the survey: Craft clear and concise questions to maximize response rates and the accuracy of the information collected.

- Distribution: Choose the right channels to reach your customers, whether it's email, social media, or in-app prompts.

- VoC analysis process: Utilize software tools to interpret the VoC data and discern actionable insights.

Voice of the Customer research

VoC research goes beyond surveys, involving a more in-depth approach to understanding customer sentiment:

- Qualitative research: Engage in conversations, interviews, and focus groups. This allows for a deeper exploration of customer motivations and pain points.

- Analytics: Employ advanced analytics to evaluate customer feedback on a granular level, identifying trends and patterns within the unstructured data.

- Integration: Correlate VoC data with other customer data points using Customer Relationship Management (CRM) systems to gain a 360-degree view of the customer experience.

Voice of Customer analytics

Proper VoC analysis and its effective application are critical in shaping customer-centric business strategies. Your goal is to glean insights from analytics and apply methodologies that directly feed into product enhancements and customer satisfaction.

VoC analytics involves quantifying qualitative data to translate customer feedback into actionable insights. This process usually includes:

- Categorization: Sorting the feedback into themes like usability, cost, or customer service quality.

- Sentiment analysis: Determining whether customer feedback is positive, negative, or neutral.

- Trend tracking: Observing changes in feedback over time to detect emerging issues or improvements.

By employing specialized software, you can automate the analysis of large volumes of feedback, swiftly identify key drivers of customer satisfaction, and quantify their potential impact on customer loyalty as well as business outcomes.
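To make the three steps above concrete, here is a minimal sketch in Python. It is not how any particular VoC tool works: the keyword lists, theme names, and function names are all hypothetical, and real software would typically use a trained language model rather than word matching.

```python
from collections import Counter, defaultdict

# Hypothetical keyword lists for illustration only.
THEMES = {
    "usability": {"confusing", "easy", "intuitive", "navigate"},
    "cost": {"price", "expensive", "cheap", "cost"},
    "customer service": {"support", "agent", "response", "helpful"},
}
POSITIVE = {"easy", "intuitive", "helpful", "great", "love", "cheap"}
NEGATIVE = {"confusing", "expensive", "slow", "bug", "hate"}


def tokenize(text):
    """Lowercase the text and strip basic punctuation from each word."""
    return [word.strip(".,!?").lower() for word in text.split()]


def categorize(text):
    """Categorization: return every theme whose keywords appear in the text."""
    words = set(tokenize(text))
    return sorted(theme for theme, keywords in THEMES.items() if words & keywords)


def sentiment(text):
    """Sentiment analysis: compare counts of positive and negative words."""
    words = tokenize(text)
    score = sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


def trend(feedback):
    """Trend tracking: count sentiment labels per period."""
    by_period = defaultdict(Counter)
    for period, text in feedback:
        by_period[period][sentiment(text)] += 1
    return {period: dict(counts) for period, counts in by_period.items()}


feedback = [
    ("2024-Q1", "The dashboard is confusing and support was slow."),
    ("2024-Q1", "Too expensive for what it does."),
    ("2024-Q2", "The new dashboard is easy to navigate, great work!"),
]
for period, text in feedback:
    print(period, categorize(text), sentiment(text))
print(trend(feedback))
```

Even a rough pass like this shows which themes attract negative comments and whether the balance shifts from one period to the next.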

Voice of Customer tools

When you aim to understand your customers, VoC tools are indispensable. They facilitate the gathering, analyzing, and acting upon valuable feedback.

When selecting a tool, consider these features:

- Ease of use: Ensures you can set up and manage the tool without excessive training.

- Integration: Look for tools that integrate with your existing systems.

- Analytics: Strong analytical features will help you make sense of complex data.

- Feedback collection: The ability to collect feedback through various channels is vital.

Here's a concise overview of tools to consider:

Survicate

Survicate is versatile feedback management software that helps you gather customer insights through targeted surveys distributed via email, links, websites, products, and mobile apps.

With AI features, such as an AI survey creator or open-text analysis and categorization, Survicate considerably speeds up the discovery of valuable insights. With real-time data analysis, you can easily keep up with incoming feedback.

HubSpot

Known for its comprehensive CRM capabilities, HubSpot helps you integrate the customer feedback you collect (with Survicate, for example) into the customer journey. Bonus: you can connect the two tools with a native HubSpot integration for Survicate.

Brand24

Brand24 helps you track and analyze online conversations about your brand, products, or industry across various platforms in real time. It is particularly useful for monitoring brand mentions and gaining insights into customer opinions and trends.

Voice of Customer templates

Incorporating the Voice of Customer into your business strategy is crucial for understanding and fulfilling customer needs. Efficient VoC programs are supported by robust templates and real-world examples, helping to streamline feedback collection and analysis.

When designing a VoC program, templates are indispensable for consistently organizing and interpreting customer feedback. Here are two critical types:

Kano Model template

This divides features into five categories (Must-be, One-dimensional, Attractive, Indifferent, and Reverse) based on how they affect customer satisfaction. Use this to prioritize which features to develop or improve.

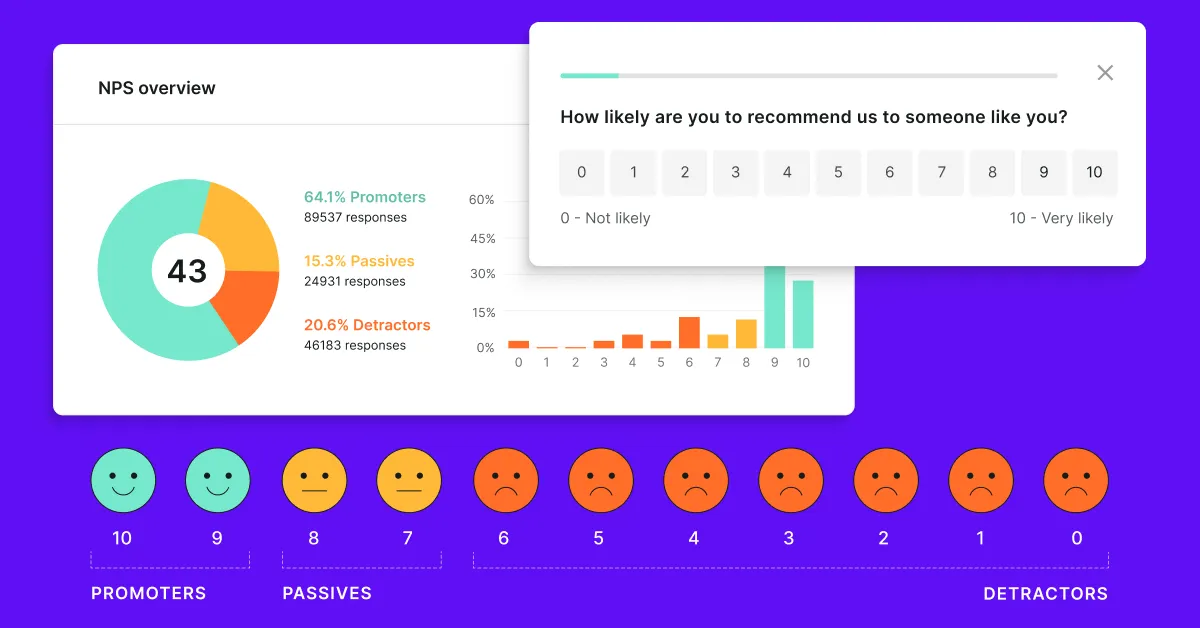
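As a rough illustration of how Kano survey answers can be turned into these categories, the sketch below follows one commonly used version of the Kano evaluation table. It assumes each customer answers two questions per feature (how they feel if the feature is present and how they feel if it is absent) on a five-point scale. The answer labels, the function names, and the extra "Questionable" bucket for contradictory answers are assumptions for this example, not part of the template above.

```python
from collections import Counter

# Assumed answer scale for both the "feature present" (functional)
# and "feature absent" (dysfunctional) questions.
ANSWERS = ("like", "expect", "neutral", "tolerate", "dislike")


def kano_category(functional, dysfunctional):
    """Map one pair of answers to a Kano category."""
    if functional not in ANSWERS or dysfunctional not in ANSWERS:
        raise ValueError("answers must be one of: " + ", ".join(ANSWERS))
    # Liking both presence and absence (or disliking both) is contradictory.
    if functional == dysfunctional and functional in ("like", "dislike"):
        return "Questionable"
    if functional == "like":
        return "One-dimensional" if dysfunctional == "dislike" else "Attractive"
    if dysfunctional == "dislike":
        return "Must-be"
    if functional == "dislike" or dysfunctional == "like":
        return "Reverse"
    return "Indifferent"


def classify_feature(responses):
    """Return the most frequent category across all responses for a feature."""
    counts = Counter(kano_category(f, d) for f, d in responses)
    return counts.most_common(1)[0][0]


# Hypothetical responses for a single feature.
responses = [
    ("like", "dislike"),
    ("like", "neutral"),
    ("like", "dislike"),
    ("neutral", "neutral"),
]
print(classify_feature(responses))
```

Taking the most frequent category is the simplest aggregation. With a small sample or a near tie, it is worth looking at the full distribution before prioritizing.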

Net Promoter Score (NPS) template

NPS categorizes customers into Promoters, Passives, or Detractors. It gauges loyalty and overall satisfaction by asking how likely they are to recommend your brand to others.
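The calculation behind the score is simple enough to sketch. The example below uses the standard NPS convention: answers on a 0 to 10 scale, with 9 and 10 counted as Promoters, 7 and 8 as Passives, and 0 to 6 as Detractors. The function names and sample scores are made up for illustration.

```python
def nps_segment(score):
    """Return the NPS segment for a single 0-10 answer."""
    if not 0 <= score <= 10:
        raise ValueError("NPS answers must be between 0 and 10")
    if score >= 9:
        return "Promoter"
    if score >= 7:
        return "Passive"
    return "Detractor"


def net_promoter_score(scores):
    """NPS = percentage of Promoters minus percentage of Detractors."""
    if not scores:
        raise ValueError("at least one response is required")
    segments = [nps_segment(score) for score in scores]
    promoters = segments.count("Promoter")
    detractors = segments.count("Detractor")
    return 100 * (promoters - detractors) / len(scores)


# Hypothetical survey answers: 5 Promoters, 2 Passives, 3 Detractors.
scores = [10, 9, 9, 8, 7, 6, 3, 10, 5, 9]
print(net_promoter_score(scores))  # 20.0
```

Passives count toward the total number of responses but not toward either percentage, which is why the score can range from -100 to 100.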

Voice of the Customer best practices

Certain best practices can be instrumental in elevating your Voice of the Customer (VoC) program. These strategies are tailored to transform customer feedback into actionable insights, ensuring your business stays aligned with customer needs and expectations.

- Set measurable objectives: Begin with clear, quantifiable goals for your VoC program. This focus helps steer the collection, analysis, and application of customer feedback towards improving specific aspects of your product or service.

- Gather diverse feedback: To collect comprehensive feedback, use a variety of VoC tools, such as surveys, interviews, and focus groups. Diversify the channels through which you collect information to encompass a broader range of customer perspectives.

- Implement a structured approach: Establish a well-defined VoC methodology to systematize how you manage customer feedback. This structured approach ensures consistency and reliability in understanding customer sentiments.

- Leverage VoC analytics: Apply VoC analytics to dissect and interpret the data. Look for patterns and trends that reveal underlying customer issues or desires, translating insights into tangible business improvements.

- Act on feedback swiftly: Responsiveness is key. Quickly addressing the feedback received resolves customer concerns and demonstrates your commitment to their satisfaction.

- Continuous improvement: Treat your VoC program as a dynamic business component. Review and refine your strategy regularly to adapt to new customer trends and feedback.

- Measure impact: Validate the effectiveness of actions taken based on VoC data by measuring their impact on customer satisfaction and business outcomes.

Remember, your VoC program is more than a set of activities; it's a strategic tool to ensure your business can genuinely hear and respond to the voice of your customers.

What to do with Voice of the Customer feedback

If your data is in, but you have no idea what to do with it, worry not. Here are some ideas on how best to use your VoC feedback.

1. Close the feedback loop

The ultimate way to improve your customers’ experience is to let them know you have implemented their feedback. Thanks to modern feedback tools combined with CRMs and help desk apps, you can easily create a system where each customer who leaves feedback gets a notification when it’s implemented.

This lets your customers know that you’re actively listening to them and taking action on their feedback. At the same time, it gives a more personalized experience, even though you can automate the entire operation with a few apps.
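Here is a minimal sketch of the bookkeeping such a system needs, written in Python. It is only an illustration: the class and method names are hypothetical, and actually sending the messages would go through whatever CRM or help desk app you use, which is not shown here.

```python
from dataclasses import dataclass, field


@dataclass
class FeedbackItem:
    """One piece of feedback and the customers who asked for it."""
    title: str
    requested_by: set = field(default_factory=set)
    implemented: bool = False


class FeedbackLoop:
    """Tracks who asked for what, so they can be told when it ships."""

    def __init__(self):
        self.items = {}

    def record(self, title, customer_email):
        """Attach a customer to a feedback item, creating it if needed."""
        item = self.items.setdefault(title, FeedbackItem(title))
        item.requested_by.add(customer_email)

    def mark_implemented(self, title):
        """Mark an item as done and return the notifications to send."""
        item = self.items[title]
        if item.implemented:
            return []  # already announced, avoid duplicate messages
        item.implemented = True
        message = f"Thanks for your feedback: '{title}' is now live."
        return [(email, message) for email in sorted(item.requested_by)]


loop = FeedbackLoop()
loop.record("Dark mode", "ana@example.com")
loop.record("Dark mode", "ben@example.com")
loop.record("CSV export", "ana@example.com")

for email, message in loop.mark_implemented("Dark mode"):
    print(email, "->", message)
```

The key design choice is storing the requesting customers with each item at collection time. Without that link, there is no one to notify once the change ships.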

2. Measure for improvements

One of the beautiful things about collecting quantitative feedback is that it gives you a good basis for comparison. For example, measuring your CSAT score in the first and fourth quarters of the year can give you good insights into how your customer satisfaction has improved over time.

However, it’s easy to make the mistake of running NPS, CSAT, or CES surveys only once in a while, when you need to catch up with customers’ sentiments quickly.

To get accurate, meaningful results, you need to ensure that you’re surveying for the right metrics with the right customers. And not just once but throughout the year and at different customer touchpoints.

Tools such as Survicate allow you to do this, as all the feedback is stored in a central hub. Each of your customers is assigned their own data set, and you can easily compare their customer experience metrics over time, be it NPS, CSAT, CES, or some other type of quantitative survey.
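For a sense of what such a comparison involves, here is a small Python sketch of a quarter-over-quarter CSAT check. It assumes the common convention of a 1 to 5 rating scale where 4 and 5 count as satisfied. The ratings are invented and the function name is hypothetical.

```python
def csat(ratings, satisfied_from=4):
    """Return the percentage of ratings at or above the satisfied threshold."""
    if not ratings:
        raise ValueError("at least one rating is required")
    satisfied = sum(1 for rating in ratings if rating >= satisfied_from)
    return 100 * satisfied / len(ratings)


# Hypothetical 1-5 ratings collected in two different quarters.
q1_ratings = [5, 4, 3, 2, 4, 5, 1, 3, 4, 2]
q4_ratings = [5, 5, 4, 3, 4, 5, 4, 2, 5, 4]

q1_score = csat(q1_ratings)
q4_score = csat(q4_ratings)
print(f"Q1 CSAT: {q1_score:.0f}%")                       # Q1 CSAT: 50%
print(f"Q4 CSAT: {q4_score:.0f}%")                       # Q4 CSAT: 80%
print(f"Change: {q4_score - q1_score:+.0f} points")      # Change: +30 points
```

The comparison is only meaningful if both quarters use the same question, scale, and touchpoint, which is one more reason to survey consistently throughout the year.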

3. Assign stakeholders

With the right feedback, you’ll know whether something in your customer experience journey needs improvement. Once you have the VoC feedback handy, it’s time to assign each piece to a relevant person or team in your company so they can start working on it.

For example, if customers request a new feature, create a task for your product and development team and then put the improvement on your roadmap. You can follow a similar process if a bug needs fixing. If your customer support needs improvement (such as a better first response time), you can start by finding the bottleneck and assigning it to the right person.

Manage your VoC feedback program with Survicate

Gathering feedback is getting more important each year. As brands have a harder time differentiating themselves with features, competing based on fantastic customer experience will become one of the key ways to stand out against tough competitors.

To start collecting feedback quickly and effectively, why not try Survicate? With the AI survey creator and different platforms you can use to distribute your surveys, Survicate has something for any business looking to collect VoC feedback. Sign up for your free trial today!