No matter what industry you are in, the average conversion rate for landing pages hovers around 2%. Top-performing landing pages can reach conversion rates above 5%, but let's stick with the 2% average.

If you have a page that gets 10,000 views, for example, that means that you can expect 200 conversions from it.

A common way to get more conversions is simply to increase website traffic. After all, more traffic equals more conversions, right?

But what if, instead of growing your traffic, you could improve your conversion rate? A relatively small increase, let's say from 2% to 3%, would turn those same 10,000 views into 300 conversions. If the page gets that traffic every month, that could mean an extra 100 paying customers, every month. But where do you even get started?
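The arithmetic behind that comparison can be sketched in a few lines of Python. This is only an illustration: the function name `expected_conversions` is made up for this example, and the figures are the ones used above (10,000 views, 2% versus 3%).

```python
def expected_conversions(views: int, rate: float) -> int:
    """Expected number of conversions for a given number of views and conversion rate."""
    return round(views * rate)


views = 10_000  # page views from the example above

baseline = expected_conversions(views, 0.02)  # average 2% conversion rate
improved = expected_conversions(views, 0.03)  # after improving to 3%
extra = improved - baseline                   # conversions gained without any new traffic

print(f"Baseline: {baseline}, improved: {improved}, extra: {extra}")
```

With the same traffic, a one-percentage-point improvement adds half again as many conversions, which is why working on the rate can pay off faster than chasing more visitors.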

Conversion rate optimization is one of the most important branches of marketing and today, we’re giving you 14 of the very best tools you can use to increase your conversions and get more revenue from your existing traffic.


Survicate

Ever wanted to find out what’s stopping your customers from converting? Have you tried actually asking them?

There could be a number of things wrong with your website, including the design, the copy, the offer or something else. Use a customer feedback platform, like Survicate, and run a survey to find out what your customers think about any of these things.

Wondering what to ask? There is so much to choose from.

Survicate helps you get inspired by offering hundreds of professional, ready-to-use survey templates, like:

- Product-market fit surveys

- Brand name testing surveys

- Consumer preference surveys

- Content rating surveys

- Free-to-paid conversion rate surveys

You can also check out 21 customer satisfaction questions, including ones about how satisfied customers are with navigating your website, and these 17 user feedback questions for further inspiration.

Once you have decided on the exact questions and the answers you need to improve conversions, create your surveys. It takes just a few minutes, whether you build one from scratch, use an AI survey builder to speed things up, or pick from the many templates and ready-to-use questions in the library.

Then you can launch Survicate surveys through your website, mobile app, email, and more, so you can collect feedback across channels.

💡 Karolina Brach, our conversion rate optimization expert, says that her favorite tip for optimizing website conversion using surveys is sharing special deals and offers via website survey pop-ups triggered on specific landing pages. That way, she can present an enticing message to a dedicated audience while directing them to another URL, creating a logical journey for those users. If a user is interested in a given landing page, they should be quite receptive to a special deal too.

Now, obviously, just asking the right questions or distributing your surveys is not enough to optimize conversion rates.

With Survicate, you can easily analyze the collected answers, even if there are hundreds or thousands of them. Its AI features categorize the collected feedback for you, so you get automatically extracted insights, sentiment analysis, and customizable dashboards.

What's more, Survicate integrates natively with dozens of tools, so you can combine the qualitative feedback from your surveys with your favorite analytics tools, like Google Analytics or Mixpanel, and really get the why behind customers' answers. To improve conversions further, you can also integrate Survicate with a customer communication tool of your choice, like Braze, or your favorite CRM, like HubSpot, to fully personalize the campaigns and messaging sent to customers.

Survicate is a perfect fit for any company and any stage of the sales funnel. For a surefire way to skyrocket your conversions, start with the “why”—and use Survicate. Go for a 10-day free trial and start asking.

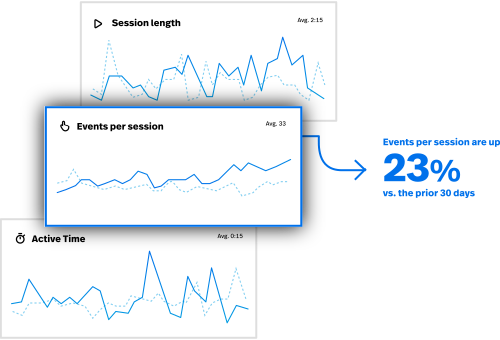

FullStory

Just created a page and you have no idea why it’s not converting? You could look into Google Analytics and the goals you set up, but that only gives you the raw data. Sometimes, you need a bit more information to paint the full picture, and this is where FullStory comes into play.

Using this app, you can get access to session recordings from your website users so you can see what is causing them to bounce rather than convert. If you want to get more global insights, tap into user heatmaps to see which parts of your pages get the most engagement.

FullStory also gives you something called Frustration Signals—signs that something is seriously wrong with your content and that your visitors are not liking it. You can also track funnels and conversions within the app and track your progress in detailed dashboards.

Unfortunately, FullStory does not list its pricing on its website, so you need to get in touch with the sales team by booking a demo.

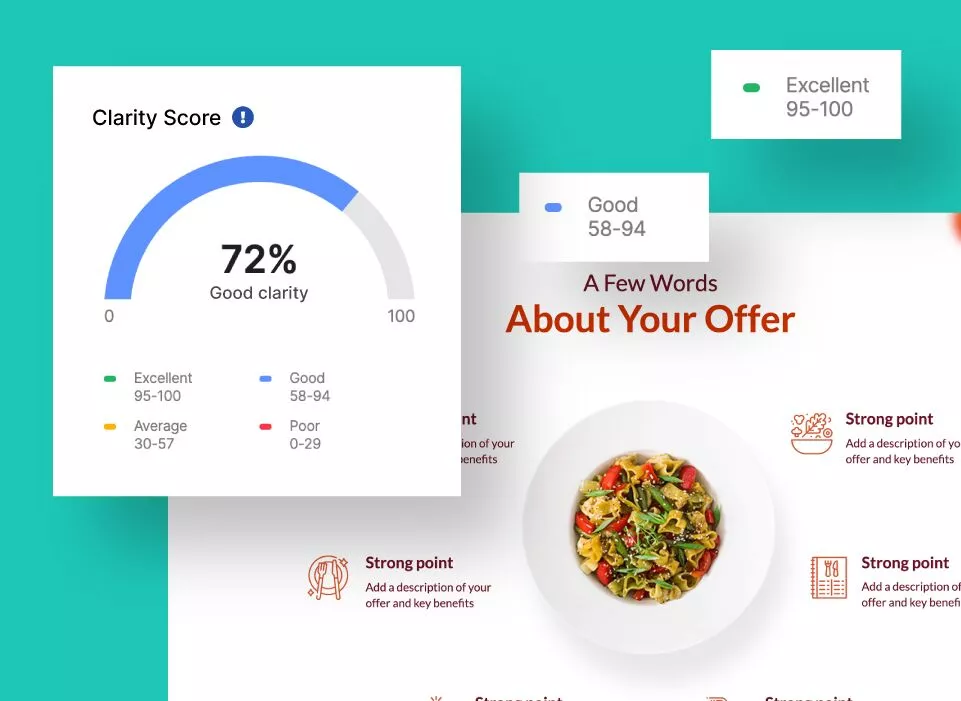

Landingi

Landing pages are those pages on your website which are meant to drive conversions, among other things. Using Landingi, you can create beautiful landing pages in their drag-and-drop editor and make sure that you’re one step closer to the sale—at least in terms of design.

But this tool has an extra trick up its sleeve as well. There is an AI tool within Landingi called PageInsider. Just take your freshly built Landingi landing page and run it through PageInsider and you’ll get a quick rundown of what you need to fix.

There are three main aspects of this tool. One, it shows you the clarity of the page as a percentage, so you know how easy it is to understand the content. Two, it shows you the places where customers will pay the most attention. Last but not least, it shows whether your CTAs will be effective or not, all based on AI.

While it is no substitute for a CRO expert, this tool can point you in the right direction. It is available as a $5 add-on to any paid plan, which is great value for money. The cheapest Landingi paid plan starts at $29; however, you can publish one landing page for free.

Optimizely

This is an enterprise customer experience tool that can do many things and conversion rate optimization is just one of them. Within the Intelligence Cloud part of the app, you can use Web Experimentation to unearth precious feedback about your CRO efforts.

Even though it sounds really complex, Optimizely boils down to a simple A/B testing solution that is well integrated into a larger offering. You can take any aspect of your website, such as copy, design, placement or something else and run it in Optimizely to see what performs better with your target audience.
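To make the idea of "what performs better" concrete, here is a minimal sketch of how two variants of a page might be compared. It does not use Optimizely's API; the function names and the visitor and conversion counts are hypothetical and chosen only for illustration.

```python
def conversion_rate(conversions: int, visitors: int) -> float:
    """Share of visitors who converted, as a fraction between 0 and 1."""
    if visitors <= 0:
        raise ValueError("visitors must be positive")
    return conversions / visitors


def relative_lift(control_rate: float, variant_rate: float) -> float:
    """Relative change of the variant's rate compared with the control's rate."""
    if control_rate <= 0:
        raise ValueError("control_rate must be positive")
    return (variant_rate - control_rate) / control_rate


# Hypothetical results for illustration only, not real data.
control = conversion_rate(conversions=100, visitors=5_000)
variant = conversion_rate(conversions=150, visitors=5_000)
lift = relative_lift(control, variant)

print(f"Control: {control:.1%}, variant: {variant:.1%}, lift: {lift:.0%}")
```

This sketch only compares the raw rates. It does not check whether the difference is statistically significant, so in practice you would still rely on your testing tool to tell you when a result can be trusted.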

💡 Survicate integrates with Optimizely as well, allowing you to run surveys targeted at specific experiment variants you're running in Optimizely, to best compare what users think of each option and get the 'why' behind the A/B tests.

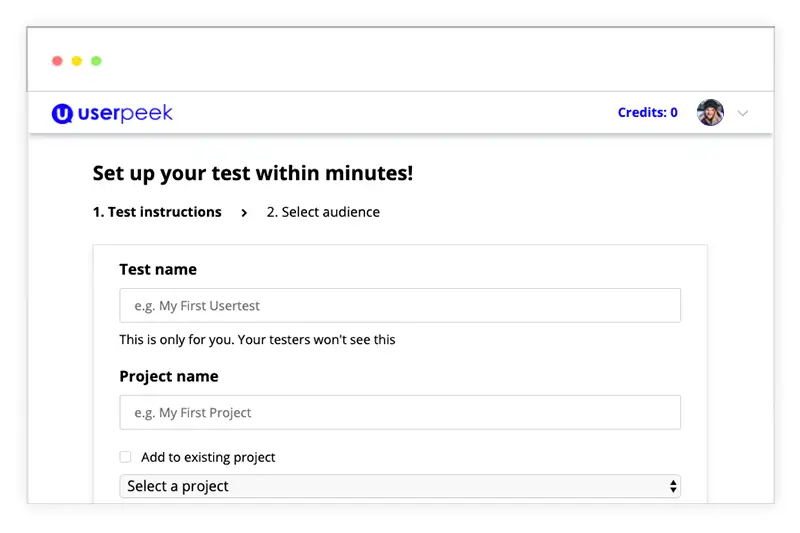

UserPeek

If you’re sitting behind a screen, scratching your head and wondering why a page is not converting, it’s time to stop. With UserPeek, you can talk to an actual human being and see what they think about it.

Similar to UserTesting, probably the most popular product in this niche, UserPeek lets you send a page to a live group of people and get their feedback. You can get insights from people in real time, recorded on video, as they’re browsing your landing page.

The most affordable plan is $55 per added tester. Although it’s not the cheapest tool on the list, the findings you get this way can be priceless.

Braze

Engaging customers through on-point communication remains a huge part of increasing the conversion rates. And this is where Braze comes into play. Braze is a customer engagement platform that helps you reach customers with personalized messaging across channels.

From emails to SMS and even WhatsApp messages, Braze lets you set up exactly the messages you want to reach your customers with. Let's say you've introduced a special deal that could boost your conversions. With Braze, you can easily let your customers know through various channels.

The best part?

Braze also integrates with Survicate, helping you personalize customer comms based on real customer feedback collected via surveys. You can also use survey responses to automatically trigger workflows directly in Braze, so you can launch better-targeted campaigns even faster.


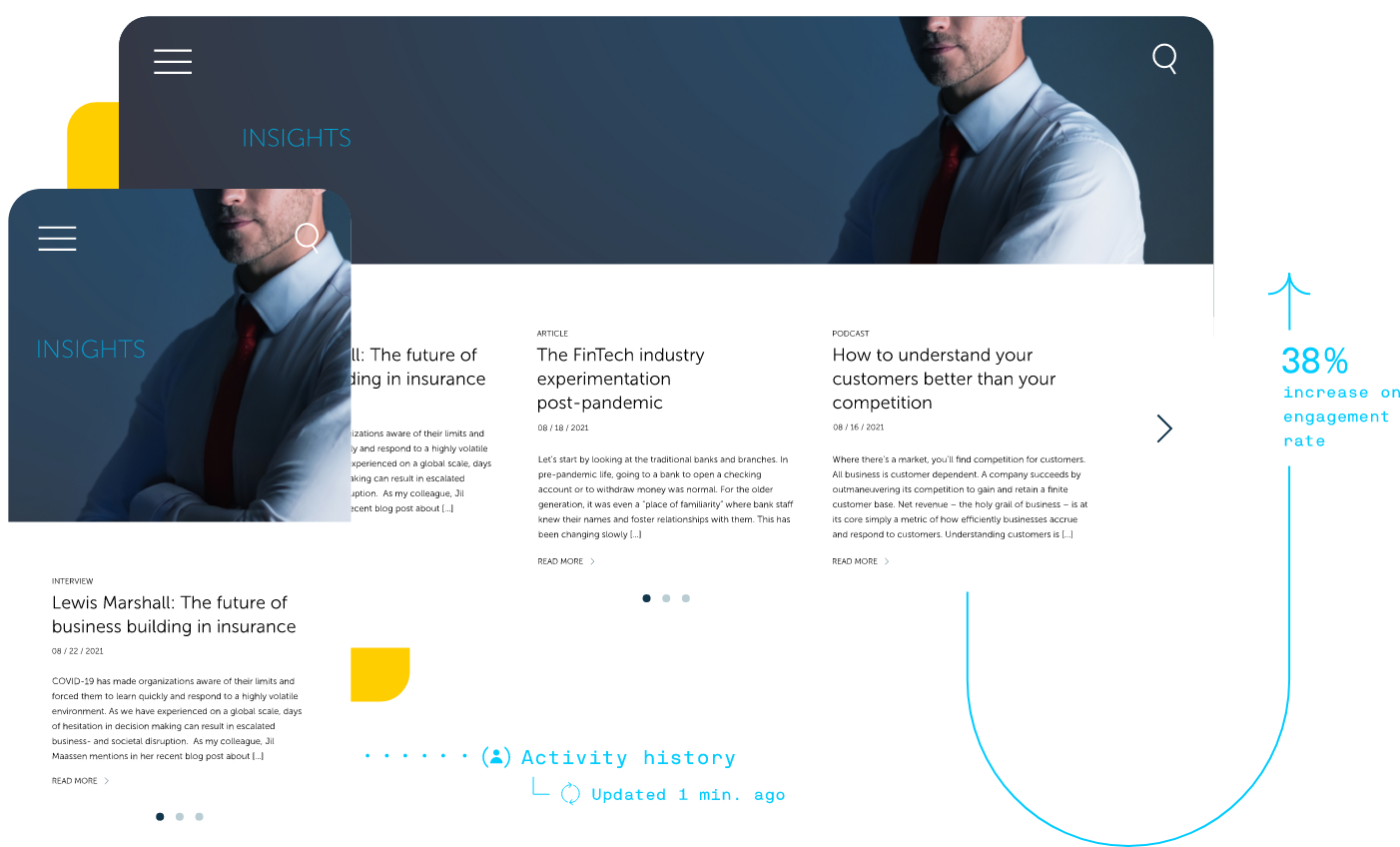

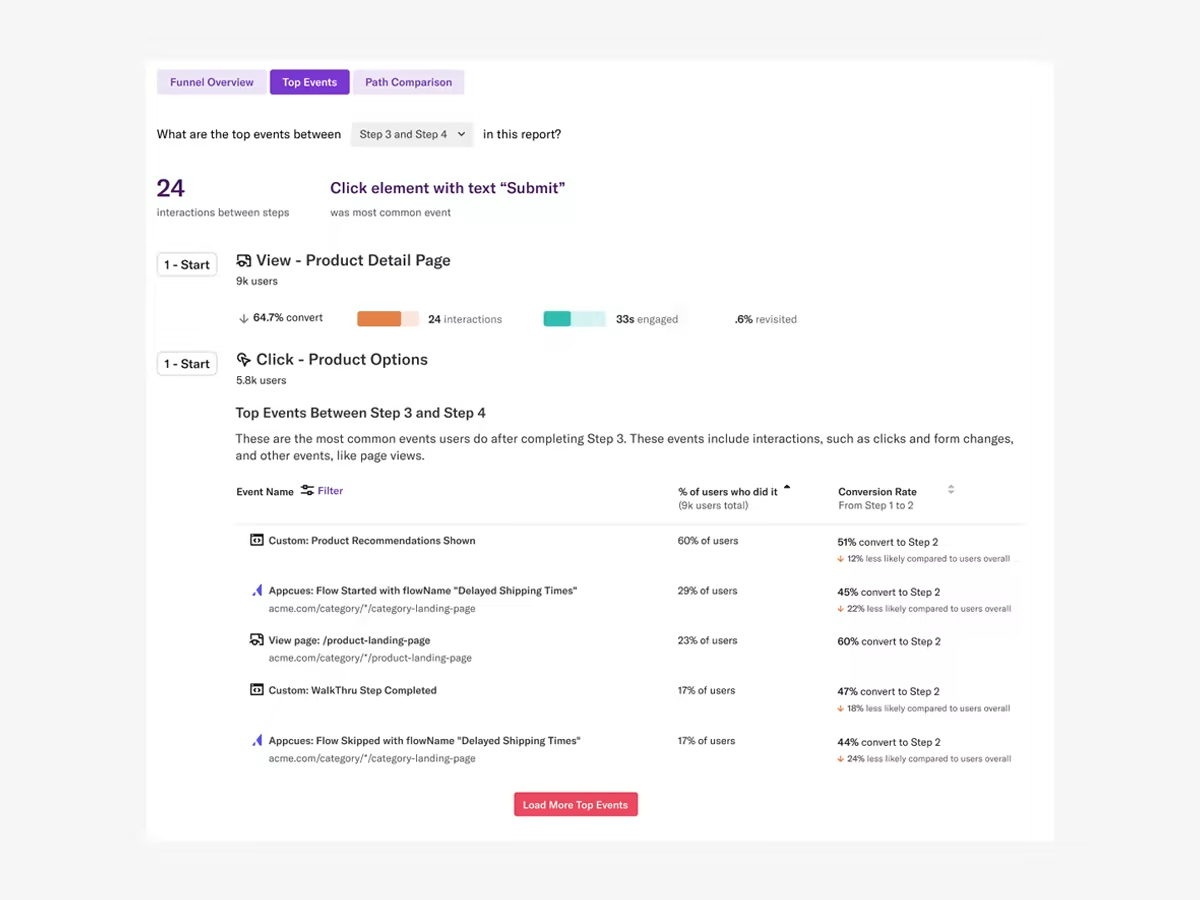

Heap

Finding out what customers do on your website is the first step to increasing your conversions. Similar to the previously mentioned FullStory, Heap shows you the data behind every action performed on your website.

Session replay is the best-known aspect of Heap, doing precisely what it claims—allowing you to replay user sessions. Illuminate is the second-best feature of the app, and it shows you the exact points on your website pages and funnels where customers drop off or show friction.

Based on their behavior on your website, you can also segment your customers and show their activity through detailed dashboards. Unfortunately, Heap does not provide its pricing publicly so you’ll have to get in touch to get a quote for your specific needs. However, there is a free plan available.

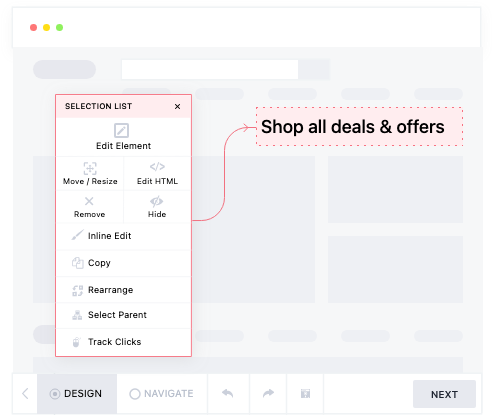

VWO Testing

Split testing is easier said than done. And when you want it to be easy, you can use VWO and their product called VWO Testing. You can use it to split test any aspect of your website, down to a single line of code. And the beauty of it is that you don’t need to know how to write a single line of code in the first place.

VWO Testing uses an intuitive visual editor that lets you drag and drop and click around to change different aspects of your website. This lets you run a series of different split tests of different versions of the same page to check and see which one performs best.

Much like the competition, VWO Testing is intended for enterprise audiences with deep pockets, with paid plans starting at just over $300 per month. However, there is a free plan you can try out.

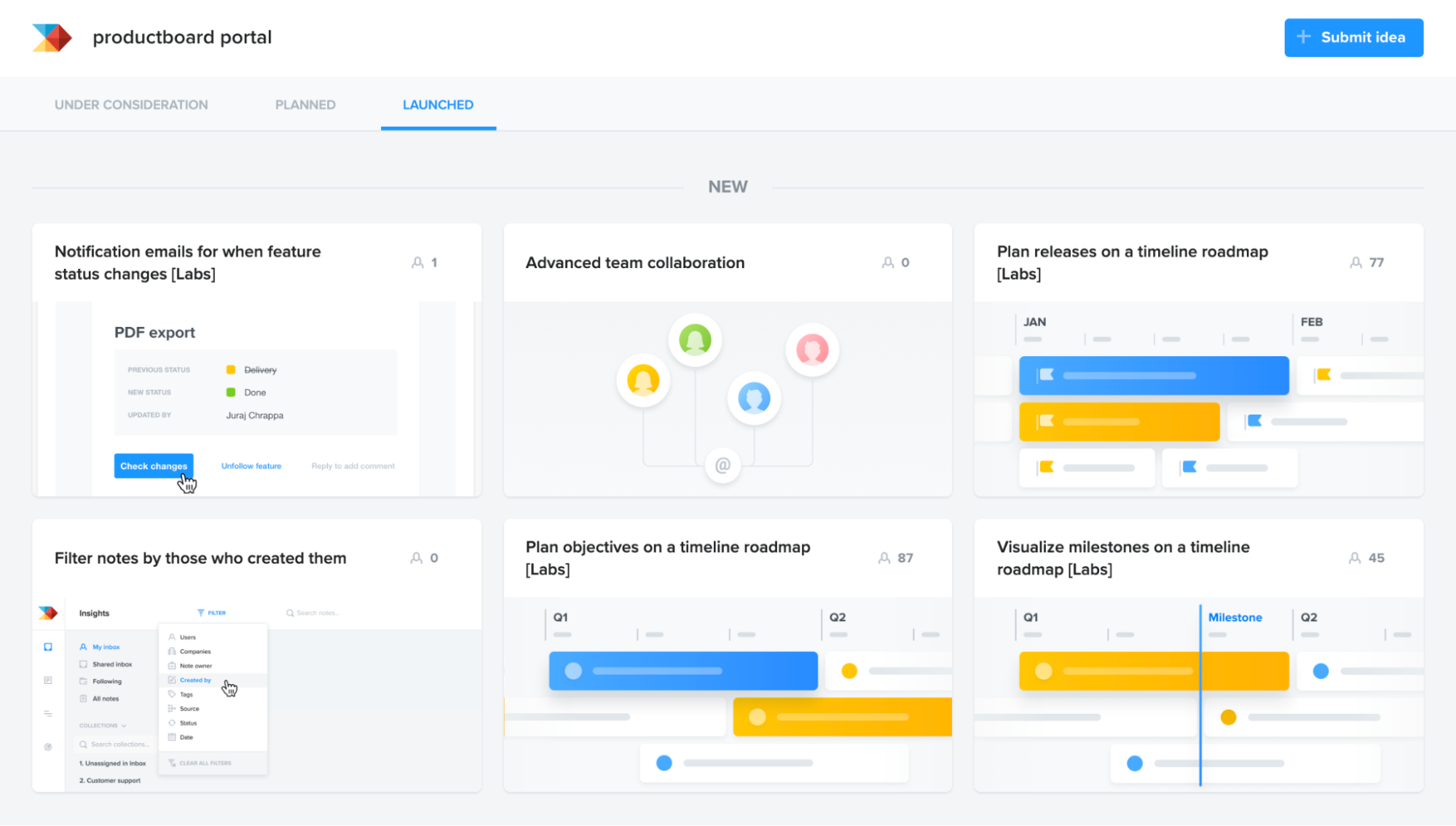

ProductBoard

If you already have a pool of engaged customers using your SaaS product, you’ll benefit from using ProductBoard. One of the heavyweights in the product management space, this app lets you collect customer feedback from A to Z. Today, we’re mostly interested in the Ideas portion of the tool.

You can use Ideas to gather feedback from specific customers on specific pages and ask them how they feel about their experience. For example, you can ask free trial customers what’s preventing them from upgrading or trying out a new feature.

While online reviews generally state that Productboard is a bit complex to use and has a steep learning curve, this tool is a powerful way to close the feedback loop for SaaS products. Pricing starts at just $19 per month, and a free plan is available as well.

💡 You can also integrate Productboard with Survicate and easily run contextual surveys in your product. Then send the responses to Productboard as notes, so you can create products tailored to your customers' needs.

Customer.io

Communication is at the heart of marketing and this is exactly what Customer.io helps you with. This tool allows you to get in touch with your customers using various methods, from live chat to SMS, emails and in-app notifications. All you have to do is set up the app and the rest is taken care of.

Where Customer.io excels is segmentation.

Based on the actions your customers take and the information they give you, they are split up into super detailed segments so you can better market to them. In other words, personalized messaging at scale.

If it reminds you of Intercom, that's because it is similar, but much cheaper. At $100 per month, you get up to 5,000 profiles in your dashboard, which is excellent value for money.

💡 To step it up further, integrate Customer.io with Survicate and collect actionable feedback directly from email campaigns. You can also automatically capture respondents’ details from Customer.io contact lists.

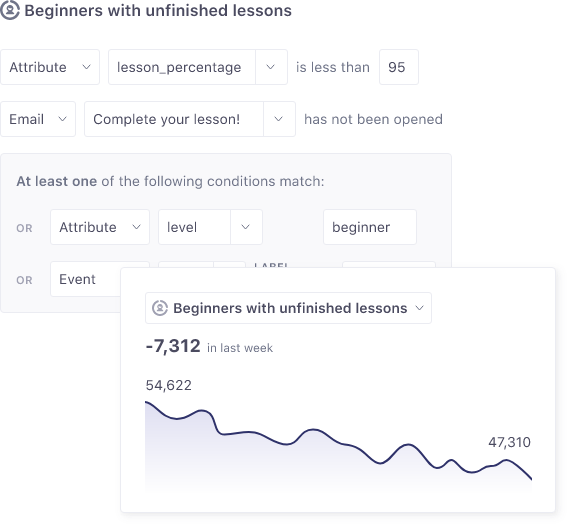

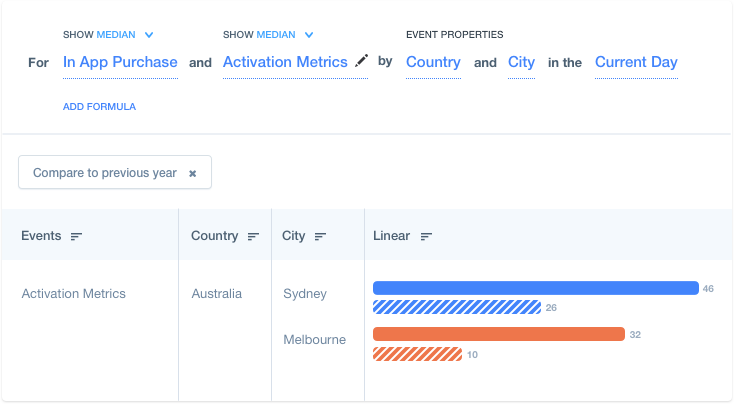

Mixpanel

Ever wanted to find out exactly what happens on your website and in your product? Mixpanel does just that. Set it up and watch as patterns emerge when Mixpanel analyzes behavior on your website. You can easily see where customers drop off and the app will throw suggestions your way about what’s gone wrong.

Besides analytics, you can also use it for super detailed segmentation based on any attribute you want, from the location of the visitor all the way to the browser being used. You can then use the segmented audiences for better targeting and improving your conversion rates.

There’s a very generous free plan that allows up to 10k session replays per month and lets you save 5 reports per seat.

💡 If you decide to use Mixpanel, make sure to add Survicate to the mix to combine the raw data with more context coming directly from surveys. Survicate integrates with Mixpanel natively, allowing for easy toolstack combination.

Freshdesk

Omnichannel customer service is the name of the game in the years to come and Freshdesk is one of the top competitors in this space. It essentially lets you communicate with the same customer across different platforms, seamlessly and all in one inbox.

Just set up Freshdesk once and you can chat to customers on the phone, through live chat, social media and email—and more. One contact, all the updates in one place. This not only allows for better personalization and better conversions but also saves precious time for your customer service agents.

There’s a free plan you can grab. And even if you have to pay, the $15 per month per agent is a small price for the personalization you can offer to your customers.

UseProof

There’s a good chance you’ve seen UseProof in action on a website before without knowing it. The basic premise of this tool is that people use social proof as a basis for purchasing decisions. In fact, UseProof promises as much as a 15% increase in conversions with just 15 minutes of work.

UseProof shows your website visitors notifications based on the rules you set. For example, you can show them how many people bought a product they’re just looking at. You can also show them the locations of customers who recently downloaded a course of yours. In short, any kind of activity that happened on your website can be shown to other visitors as a form of social proof.

You can expect to pay $79 per month for the cheapest plan with up to 10,000 monthly visitors.

Zapier

Although technically speaking, Zapier is not a CRO application, it can be used for plenty of optimization activities. Your marketing tech stack consists of many apps and maybe some of them don’t have native integrations with each other. This is where Zapier comes into play.

It connects thousands of different applications from different industries and niches, so the sky is the limit here. For example, you can use one tool to split-test your landing page and then use Zapier to send the results from your opt-in tool to your CRM if there is no native integration.

Zapier is free if you run fewer than 100 tasks per month, but you’ll quickly run out of those. Plans start at $19.99 monthly for 750 tasks, which is a very good deal given the amount of time you can save.

Add the 'why' behind raw data and optimize CRO better

Conversions are the lifeblood of any online business and when you’re not working on increasing traffic, you should be working on improving your conversions. No matter how many changes happen in the platforms or marketing methods of the current day and age, the principles of conversion remain the same, and these tools can help you get more out of the traffic you already have.

And the best piece of advice we can share when it comes to CRO: no matter which tool you decide to go for, always complement the raw data with more context.

Survicate gives you the option not only to collect and analyze customer feedback with multichannel surveys, but also to connect it with third-party tools to nail CRO, from Mixpanel to Google Analytics, Braze, or Productboard.

Why not start today?

With Survicate, you can find out exactly what you need to do to move the needle and get more revenue from your traffic. Sign up for your free trial to get started!
